Elastic has added DiskBBQ, a new disk-friendly vector search algorithm, to Elasticsearch. According to the company, DiskBBQ is more efficient than traditional vector database search techniques such as Hierarchical Navigable Small World (HNSW) graphs, currently the most widely used approach.

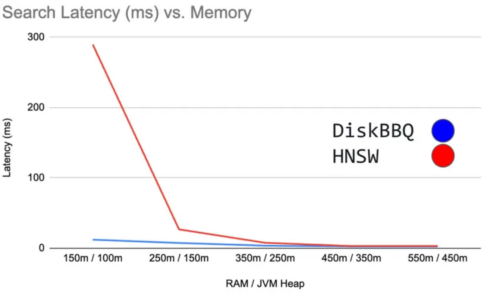

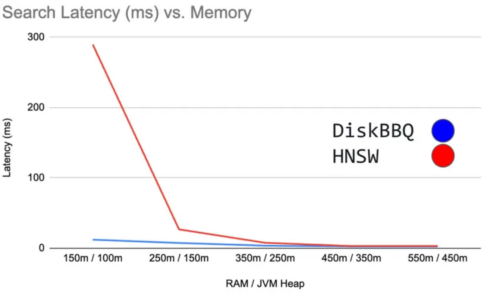

HNSW requires all vectors to reside in memory, so costs rise as datasets grow. DiskBBQ keeps costs down by removing the need to hold the entire vector index in memory.
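A quick back-of-the-envelope calculation shows why holding every vector in RAM gets expensive. The sketch below is illustrative only: the corpus size and dimensionality are hypothetical, and it counts raw vector data alone, ignoring HNSW graph links and any quantization an index might apply. The 1-bit figure is included for comparison with binary quantization, which reduces each dimension to a single bit (real schemes add some per-vector metadata on top).

```python
def raw_vector_bytes(num_vectors: int, dims: int, bits_per_dim: int = 32) -> int:
    """Bytes needed for the raw vector data only (no graph links, no metadata)."""
    total_bits = num_vectors * dims * bits_per_dim
    return (total_bits + 7) // 8  # round up to whole bytes


# Hypothetical corpus: 100 million 1,024-dimensional float32 vectors.
n, d = 100_000_000, 1024
float32_gb = raw_vector_bytes(n, d) / 1e9
one_bit_gb = raw_vector_bytes(n, d, bits_per_dim=1) / 1e9
print(f"float32: {float32_gb:.1f} GB, 1-bit: {one_bit_gb:.1f} GB")
# → float32: 409.6 GB, 1-bit: 12.8 GB
```

Memory grows linearly with both vector count and dimensionality, so at this hypothetical scale the full-precision vectors alone would need hundreds of gigabytes of RAM.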

According to Elastic, the main benefits of the new method are lower RAM usage, no latency spikes during retrieval, better performance for data ingestion and organization, and lower cost.

It works by using hierarchical k-means to partition the vectors into small clusters, each represented by a centroid. At query time, DiskBBQ searches these centroids first, traversing at most two layers of them, to pick the most promising clusters before touching the actual vectors. It then explores the vectors in each selected cluster, bulk scoring the distance between every vector in the cluster and the query vector.
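The cluster-then-probe flow described above can be sketched in Python. This is a minimal, in-memory illustration of the general idea, not Elastic's implementation: it assumes squared Euclidean distance, builds the hierarchy by recursive two-way splitting (bisecting k-means) as one form of hierarchical k-means, and stores full-precision vectors with no quantization or disk access. The class `TwoLevelIndex`, the maximum cluster size of 32, and the probe counts are hypothetical choices.

```python
import random

Vector = list[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(vectors: list[Vector]) -> Vector:
    """Component-wise mean (centroid) of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def kmeans(vectors: list[Vector], k: int, rng: random.Random, iters: int = 10) -> list[list[int]]:
    """Lloyd's k-means; returns the member indices of each non-empty cluster."""
    centroids = rng.sample(vectors, k)
    groups: list[list[int]] = []
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for i, v in enumerate(vectors):
            nearest = min(range(k), key=lambda c: sq_dist(v, centroids[c]))
            groups[nearest].append(i)
        centroids = [mean([vectors[i] for i in g]) if g else centroids[c]
                     for c, g in enumerate(groups)]
    return [g for g in groups if g]


def hierarchical_kmeans(vectors: list[Vector], ids: list[int], max_size: int,
                        rng: random.Random) -> list[list[int]]:
    """Recursively split with 2-means until every leaf holds at most max_size vectors."""
    if len(ids) <= max_size:
        return [ids]
    groups = kmeans([vectors[i] for i in ids], 2, rng)
    if len(groups) < 2:  # cannot split further (e.g. duplicate vectors)
        return [ids]
    leaves: list[list[int]] = []
    for g in groups:
        leaves.extend(hierarchical_kmeans(vectors, [ids[j] for j in g], max_size, rng))
    return leaves


class TwoLevelIndex:
    """Hypothetical IVF-style index: leaf clusters plus one layer of parent centroids."""

    def __init__(self, vectors: list[Vector], max_cluster_size: int = 32,
                 num_parents: int = 4, seed: int = 0):
        rng = random.Random(seed)
        self.vectors = vectors
        self.clusters = hierarchical_kmeans(vectors, list(range(len(vectors))),
                                            max_cluster_size, rng)
        self.centroids = [mean([vectors[i] for i in c]) for c in self.clusters]
        # Second centroid layer: cluster the leaf centroids themselves.
        self.parents = kmeans(self.centroids, min(num_parents, len(self.centroids)), rng)
        self.parent_centroids = [mean([self.centroids[i] for i in p]) for p in self.parents]

    def search(self, query: Vector, k: int = 5, parents_to_visit: int = 2,
               clusters_to_visit: int = 4) -> list[int]:
        # Layer 1: rank parent centroids by distance to the query.
        top_parents = sorted(range(len(self.parents)),
                             key=lambda p: sq_dist(query, self.parent_centroids[p]))[:parents_to_visit]
        # Layer 2: rank the leaf centroids that sit under those parents.
        candidates = sorted((c for p in top_parents for c in self.parents[p]),
                            key=lambda c: sq_dist(query, self.centroids[c]))[:clusters_to_visit]
        # Finally, score every vector in the chosen clusters against the query.
        scored = sorted((sq_dist(query, self.vectors[i]), i)
                        for c in candidates for i in self.clusters[c])
        return [i for _, i in scored[:k]]


rng = random.Random(42)
data = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(500)]
index = TwoLevelIndex(data)
print(index.search(data[123], k=3))  # approximate top-3 neighbors of data[123]
```

With the default probe counts the result is approximate; raising `parents_to_visit` and `clusters_to_visit` to cover every parent and cluster turns it into an exact brute-force search. Probing fewer clusters means scoring (and, in a disk-based design, reading) fewer vectors, at some cost in recall.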

DiskBBQ also uses Better Binary Quantization (BBQ) to compress the vectors and centroids, allowing many blocks of vectors to be loaded into memory at the same time.
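To illustrate why 1-bit codes are compact and cheap to compare, here is plain sign-based binary quantization relative to a cluster centroid, with Hamming distance as the similarity estimate. This is a deliberately simplified stand-in, not BBQ: Elastic's BBQ is more sophisticated and is not reproduced here. The function names are hypothetical.

```python
Vector = list[float]


def quantize(vector: Vector, centroid: Vector) -> int:
    """Pack one bit per dimension: 1 if the component exceeds the centroid's, else 0."""
    bits = 0
    for d, (x, c) in enumerate(zip(vector, centroid)):
        if x > c:
            bits |= 1 << d
    return bits


def hamming(a: int, b: int) -> int:
    """Number of dimensions whose bits differ (lower means more similar)."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query: Vector, codes: list[int], centroid: Vector) -> list[int]:
    """Rank stored codes by Hamming distance to the quantized query."""
    q = quantize(query, centroid)
    return sorted(range(len(codes)), key=lambda i: hamming(q, codes[i]))


centroid = [0.0, 0.0, 0.0, 0.0]
vectors = [[0.9, -0.2, 0.4, -0.7], [-0.5, 0.3, -0.8, 0.6], [0.7, -0.1, 0.2, 0.5]]
codes = [quantize(v, centroid) for v in vectors]  # 4 bits each instead of 4 floats
query = [1.0, -0.3, 0.5, -0.6]
print(rank_by_hamming(query, codes, centroid))  # → [0, 2, 1]
```

Each vector now costs one bit per dimension instead of 32 (for float32), and comparing two codes is an XOR plus a popcount, which is what makes scanning many compressed vectors at once inexpensive.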

It also uses Spilling with Orthogonality-Amplified Residuals (SOAR), a technique developed by Google, to assign vectors to more than one cluster. This helps when a vector lies close to the border between two clusters, since a query that lands in either cluster can still find it.
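The spilling idea can be sketched as follows, based on the assignment rule described in the published SOAR work: after a vector takes its nearest centroid as its primary cluster, a secondary cluster is chosen to minimize the squared norm of the new residual r' plus λ times the squared length of r' projected onto the primary residual r. This penalizes secondary clusters whose residual points the same way as the primary one, since those tend to miss the same queries. The value λ = 1.0, the single extra assignment, and the function name `soar_assign` are hypothetical; how Elasticsearch parameterizes SOAR is not covered here.

```python
Vector = list[float]


def sub(a: Vector, b: Vector) -> Vector:
    return [x - y for x, y in zip(a, b)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def soar_assign(x: Vector, centroids: list[Vector], lam: float = 1.0) -> tuple[int, int]:
    """Return (primary, secondary) cluster ids for x using a SOAR-style spill rule."""
    # Primary cluster: plain nearest centroid.
    primary = min(range(len(centroids)),
                  key=lambda j: dot(sub(x, centroids[j]), sub(x, centroids[j])))
    r = sub(x, centroids[primary])  # primary residual
    r_sq = dot(r, r)

    def spill_loss(j: int) -> float:
        r2 = sub(x, centroids[j])  # candidate residual
        # Squared length of r2's component parallel to r (zero if x sits on its centroid).
        parallel_sq = dot(r2, r) ** 2 / r_sq if r_sq > 0 else 0.0
        return dot(r2, r2) + lam * parallel_sq

    secondary = min((j for j in range(len(centroids)) if j != primary), key=spill_loss)
    return primary, secondary


centroids = [[-1.0, 0.0], [-1.5, 0.0], [0.0, 1.8]]
print(soar_assign([0.0, 0.0], centroids))           # → (0, 2)
print(soar_assign([0.0, 0.0], centroids, lam=0.0))  # → (0, 1)
```

In the example, plain second-nearest spilling picks cluster 1, whose residual is parallel to the primary one; the orthogonality penalty steers the vector to cluster 2 instead. Setting `lam=0.0` recovers the plain second-nearest choice.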

“As AI applications scale, traditional vector storage formats force them to choose between slow indexing or significant infrastructure costs required to overcome memory limitations,” said Ajay Nair, general manager of platform at Elastic. “DiskBBQ is a smarter, more scalable approach to high-performance vector search on very large datasets that accelerates both indexing and retrieval.”

DiskBBQ is available in Elasticsearch 9.2. More information about the technique can be found in the company’s blog post.