
NVIDIA CEO Jensen Huang ended his GTC 2024 keynote backed by life-size images of the various humanoids in development that are powered by the Jetson Orin computer. | Credit: Eugene Demaitre

SAN JOSE, Calif. — The NVIDIA GTC 2024 keynote kicked off like a rock concert yesterday at the SAP Center. More than 15,000 attendees filled the arena in anticipation of CEO Jensen Huang’s annual presentation of the latest product news from NVIDIA.

To build excitement, an interactive, real-time generative art display ran live on the main stage screen and mesmerized the waiting crowd. It was driven by prompts from Refik Anadol Studio.

New foundation for humanoid robotics

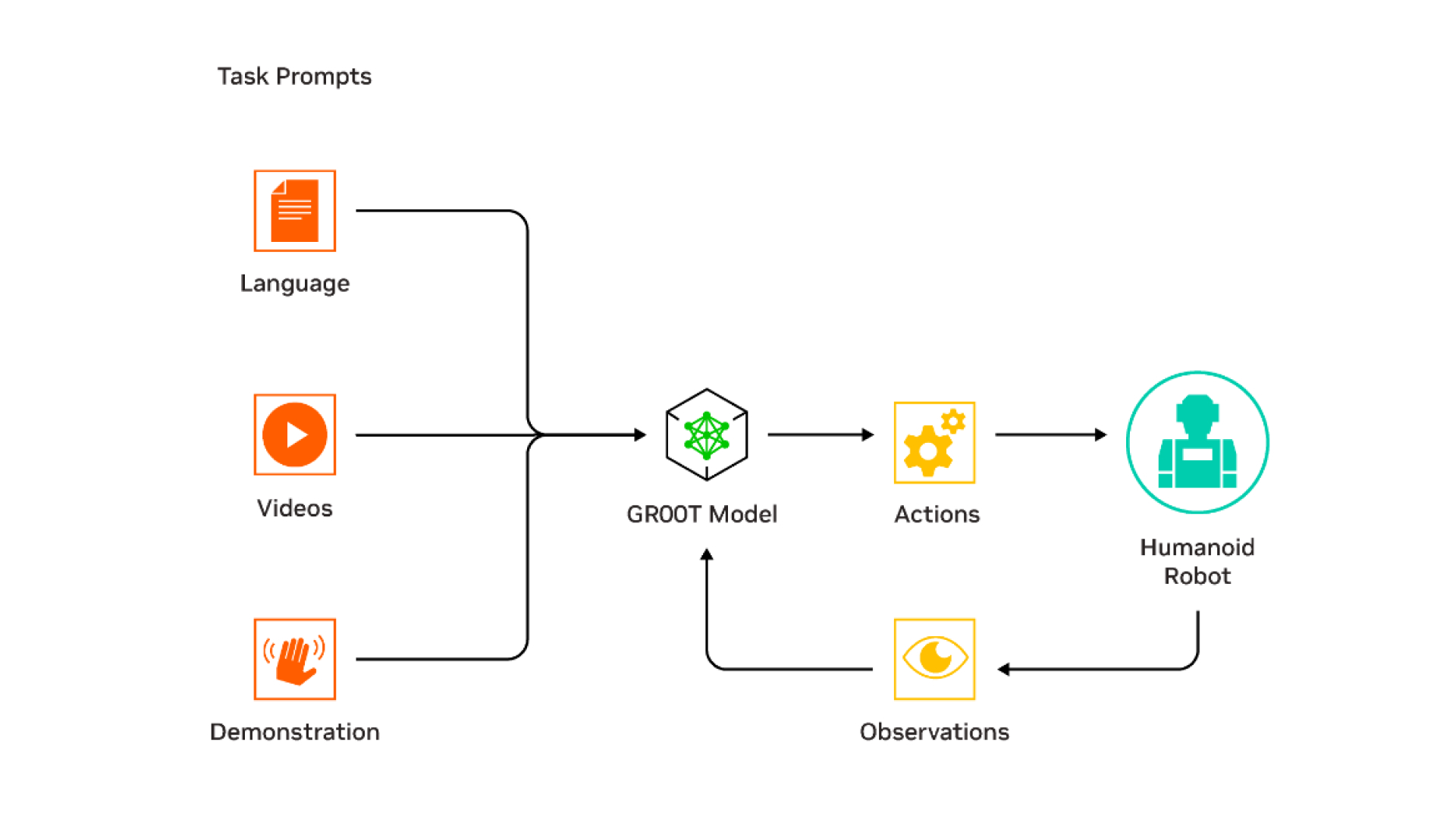

The big news from the robotics side of the house is that NVIDIA launched a new general-purpose foundation model for humanoid robots called Project GR00T. This new model is designed to bring robotics and embodied AI together while enabling the robots to understand natural language and emulate movements by observing human actions.

Project GR00T training model. | Credit: NVIDIA

GR00T stands for “Generalist Robot 00 Technology,” and with the race for humanoid robotics heating up, this new technology is intended to help accelerate development. GR00T is a large multimodal model (LMM) that gives robotics developers a generative AI platform on which to begin implementing large language models (LLMs).

“Building foundation models for general humanoid robots is one of the most exciting problems to solve in AI today,” said Huang. “The enabling technologies are coming together for leading roboticists around the world to take giant leaps towards artificial general robotics.”

GR00T uses the new Jetson Thor

As part of its robotics announcements, NVIDIA unveiled Jetson Thor for humanoid robots, based on the NVIDIA Thor system-on-a-chip (SoC). Significant upgrades to the NVIDIA Isaac robotics platform include generative AI foundation models and tools for simulation and AI workflow infrastructure.

The Thor SoC includes a next-generation GPU based on NVIDIA Blackwell architecture with a transformer engine delivering 800 teraflops of 8-bit floating-point AI performance. With an integrated functional safety processor, a high-performance CPU cluster, and 100GB of Ethernet bandwidth, it can simplify design and integration efforts, claimed the company.

Project GR00T, a general-purpose multimodal foundation model for humanoids, enables robots to learn different skills. | Credit: NVIDIA

NVIDIA showed humanoids in development with its technologies from companies including 1X Technologies, Agility Robotics, Apptronik, Boston Dynamics, Figure AI, Fourier Intelligence, Sanctuary AI, Unitree Robotics, and XPENG Robotics.

“We are at an inflection point in history, with human-centric robots like Digit poised to change labor forever,” said Jonathan Hurst, co-founder and chief robot officer at Agility Robotics. “Modern AI will accelerate development, paving the way for robots like Digit to help people in all aspects of daily life.”

“We’re excited to partner with NVIDIA to invest in the computing, simulation tools, machine learning environments, and other necessary infrastructure to enable the dream of robots being a part of daily life,” he said.

NVIDIA updates Isaac simulation platform

The Isaac tools that GR00T uses are capable of creating new foundation models for any robot embodiment in any environment, according to NVIDIA. Among these tools are Isaac Lab for reinforcement learning, and OSMO, a compute orchestration service.

Embodied AI models require massive amounts of real and synthetic data. The new Isaac Lab is a GPU-accelerated, lightweight, performance-optimized application built on Isaac Sim for running thousands of parallel simulations for robot learning.

NVIDIA software (Omniverse, Metropolis, Isaac, and cuOpt) combines to create an ‘AI gym’ where robots and AI agents can work out and be evaluated in complex industrial spaces. | Credit: NVIDIA

To scale robot development workloads across heterogeneous compute, OSMO coordinates the data generation, model training, and software/hardware-in-the-loop workflows across distributed environments.

NVIDIA also announced Isaac Manipulator and Isaac Perceptor — a collection of robotics-pretrained models, libraries and reference hardware.

Isaac Manipulator offers dexterity and modular AI capabilities for robotic arms, with a robust collection of foundation models and GPU-accelerated libraries. It can accelerate path planning by up to 80x, and zero-shot perception increases efficiency and throughput, enabling developers to automate a greater number of new robotic tasks, said NVIDIA.

Among early ecosystem partners are Franka Robotics, PickNik Robotics, READY Robotics, Solomon, Universal Robots (a Teradyne company), and Yaskawa.

Isaac Perceptor provides multi-camera, 3D surround-vision capabilities, which are increasingly being used in autonomous mobile robots (AMRs) adopted in manufacturing and fulfillment operations to improve efficiency and worker safety. NVIDIA listed companies such as ArcBest, BYD, and KION Group as partners.

‘Simulation first’ is the new mantra for NVIDIA

A simulation-first approach is ushering in the next phase of automation. Real-time AI is now a reality in manufacturing, factory logistics, and robotics. These environments are complex, often involving hundreds or thousands of moving parts. Until now, it was a monumental task to simulate all of these moving parts.

NVIDIA has combined software such as Omniverse, Metropolis, Isaac, and cuOpt to create an “AI gym” where robots and AI agents can work out and be evaluated in complex industrial spaces.

Huang demonstrated a digital twin of a 100,000-sq.-ft. warehouse, built using the NVIDIA Omniverse platform for developing and connecting OpenUSD applications. It operated as a simulation environment for dozens of digital workers and multiple AMRs, vision AI agents, and sensors.

Each mobile robot, running the NVIDIA Isaac Perceptor multi-sensor stack, can process visual information from six sensors, all simulated in the digital twin.

Image depicting AMR and a manipulator working together to enable AI-based automation in a warehouse powered by NVIDIA Isaac. | Credit: NVIDIA

At the same time, the NVIDIA Metropolis platform for vision AI can create a single centralized map of worker activity across the entire warehouse, fusing data from 100 simulated ceiling-mounted camera streams with multi-camera tracking. This centralized occupancy map can help inform optimal AMR routes calculated by the NVIDIA cuOpt engine for solving complex routing problems.
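
As a rough illustration of the fusion idea only, the Python sketch below merges worker positions reported by several cameras into one shared grid. It is not the Metropolis API. The function name `fuse_occupancy`, the camera IDs, the cell size, and the assumption that every camera already reports positions in a common floor coordinate frame are all hypothetical.

```python
from collections import defaultdict


def fuse_occupancy(camera_detections, cell_size=1.0):
    """Merge per-camera worker positions into one map of occupied grid cells.

    camera_detections maps a camera ID to a list of (x, y) floor positions,
    assumed here to be expressed in one shared warehouse coordinate frame.
    Returns a dict of grid cell -> number of distinct cameras that saw someone there.
    """
    cameras_per_cell = defaultdict(set)
    for camera_id, detections in camera_detections.items():
        for x, y in detections:
            cell = (int(x // cell_size), int(y // cell_size))
            # A set keeps one entry per camera, so a worker seen by two
            # overlapping cameras marks the cell once with two confirmations.
            cameras_per_cell[cell].add(camera_id)
    return {cell: len(cameras) for cell, cameras in cameras_per_cell.items()}


# Hypothetical detections from two overlapping ceiling cameras.
detections = {
    "cam_a": [(1.2, 3.7)],
    "cam_b": [(1.8, 3.1), (10.0, 2.0)],
}
occupancy = fuse_occupancy(detections)
occupied_cells = set(occupancy)  # cells a route planner might avoid or slow down in
```

A route planner could then treat `occupied_cells` as areas to avoid or to cross at reduced speed. A production system would also need camera calibration and tracking over time, which this sketch leaves out.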

cuOpt, an optimization AI microservice, solves complex routing problems with multiple constraints using GPU-accelerated evolutionary algorithms.
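
To give a feel for what an evolutionary approach to constrained routing looks like, here is a minimal CPU-only Python sketch. It does not use cuOpt or reflect its API or internals. The stop names, deadlines, penalty value, and the functions `route_cost` and `evolve_route` are assumptions made for illustration, and the single constraint shown is a soft deadline on cumulative travel distance.

```python
import math
import random


def route_cost(order, stops, deadlines, depot=(0.0, 0.0), penalty=100.0):
    """Total travel distance plus a fixed penalty per stop reached after its deadline."""
    position, travelled, late_penalty = depot, 0.0, 0.0
    for name in order:
        travelled += math.dist(position, stops[name])
        position = stops[name]
        if travelled > deadlines.get(name, math.inf):
            late_penalty += penalty
    return travelled + late_penalty


def evolve_route(stops, deadlines, generations=200, population_size=30, seed=0):
    """Evolve a visiting order: keep the better half, mutate copies by swapping two stops."""
    rng = random.Random(seed)
    names = list(stops)
    population = [rng.sample(names, len(names)) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=lambda order: route_cost(order, stops, deadlines))
        survivors = population[: population_size // 2]
        children = []
        for parent in survivors:
            child = parent[:]
            i, j = rng.sample(range(len(child)), 2)
            child[i], child[j] = child[j], child[i]
            children.append(child)
        population = survivors + children
    return min(population, key=lambda order: route_cost(order, stops, deadlines))


# Hypothetical pick locations (metres) and a deadline expressed as distance travelled.
stops = {"dock": (2.0, 1.0), "aisle_4": (8.0, 3.0), "packing": (5.0, 9.0), "returns": (1.0, 7.0)}
deadlines = {"packing": 12.0}
best_order = evolve_route(stops, deadlines)
best_cost = route_cost(best_order, stops, deadlines)
```

Because the better half of each generation always survives, the best cost never gets worse from one generation to the next, though nothing guarantees the result is optimal. Real solvers handle many vehicles and constraints at once and evaluate candidates in parallel, which is where GPU acceleration comes in.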

All of this happens in real time, while Isaac Mission Control coordinates the entire fleet using map data and route graphs from cuOpt to send and execute AMR commands.

NVIDIA DRIVE Thor for robotaxis

The company also announced NVIDIA DRIVE Thor, which now supersedes NVIDIA DRIVE Orin as an SoC for autonomous driving applications.

Multiple autonomous vehicles are using NVIDIA architectures, including robotaxis and autonomous delivery vehicles from companies such as Nuro, XPENG, WeRide, Plus, and BYD.