Around 50% of attendees at KubeCon in Salt Lake City will be first-timers. If that’s you: welcome, it’s gonna be an awesome show.

Like thousands of others in businesses around the world, you’ve kicked the tires on K8s and decided that it’s worth committing to, at least enough to justify the cost of a week in SLC. You’re on site to scope out technologies and vendors and learn best practices as you put Kubernetes into production in some shape or form.

So here’s the no-nonsense advice you need to make your next 12 months hurt less.

1. DIY does not work at scale

If you’re serious about Kubernetes, the data says you will end up with tens or hundreds of clusters. You need them to look and behave the same, consistently, otherwise you’ll drive yourself mad with troubleshooting and policy violations. You need the ability to stand a new cluster up for a new requirement in minutes, not weeks, or you’ll be very unpopular with your app dev teams.

We all love rolling up our sleeves and tinkering. When you were learning K8s principles and building your first cluster (‘the hard way’ or not), that was the right way to do it. You were in there, writing scripts, wrangling kubectl, tweaking YAML.

Yes, there are companies out there that have rolled their own Kubernetes ‘management platform’ over the past six or seven years and got it working pretty well. But ask them over a beer what they’d do if they were starting afresh today, and most of them will tell you they’d do it differently: they’d look for an easier way.

Learn from them: you need repeatable templates and push-button automation, but it probably doesn’t make sense to DIY your own tooling to do that.

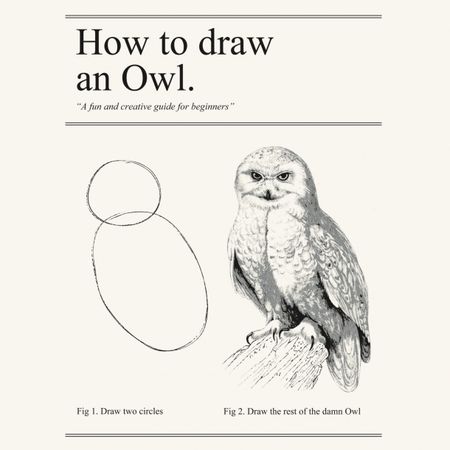

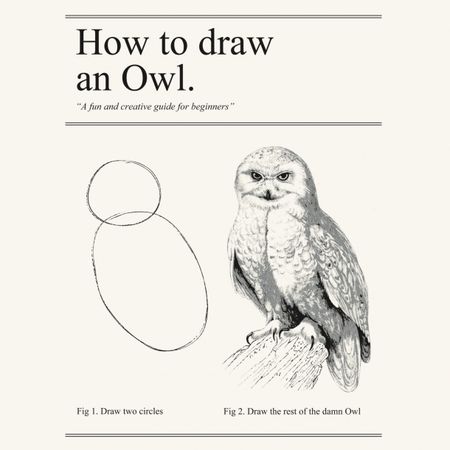

2. Building the cluster is the easy bit

K8s beginners naturally focus on getting their first clusters up and running, and the end goal is seeing their handful of nodes in a ‘ready’ state. Yes, it’s challenging — but believe it or not, it’s the easy bit.

Now you’ve got to build the rest of the enterprise-grade stack, everything from load balancers to secrets management, logging and observability. In meme parlance, it’s “the rest of the ****ing owl”.

Oh, and you need to patch, upgrade, scale, reconfigure, secure, monitor and troubleshoot that full stack. At scale. Frequently. Forever.

Unless you are blessed with unlimited headcount or very patient internal customers, you probably need to look at automation for this part, too. You’re not looking for a build tool — you’re looking for fleet lifecycle management.

One of our customers works in a highly regulated industry and is well along the road to enterprise-wide Kubernetes, primarily on-prem. Last week we interviewed him (on condition of anonymity) about his journey, and he explained how this realization hit him, too:

“I didn’t know what my team size was going to be, and at that point it was just me, and I wasn’t going to go around manually building 60 clusters or 600 clusters. There’s no way I could do that. I’d be spending all my time doing it.

“If we’re going to do this and be able to reliably create clusters the same way at scale, we cannot be doing it by hand. So I wanted to build a platform that was mostly automated.

“We need not only automation to create the clusters, but we also need to make sure that they’re maintained and updated. Someone’s got to sit in the chair for hours and do that. And that’s what led us down the path of trying to find an enterprise container management solution.”

3. Prepare for your future, today

For a decade now, Kubernetes has been surprising us all with its versatility and extensibility, thanks to custom resources, operators and the power of the K8s API.

You may have just a few mainstream use cases today, likely self-service ‘Kubernetes as a Service’ (KaaS) in the cloud or virtualized data center. But who knows what the future holds for K8s in your business?

- Maybe you’ll start looking to K8s as a way to modernize your VM workloads, as well as orchestrating containers.

- Perhaps your environments will change: if you need to deploy clusters at the edge, on bare metal or in different clouds, can your current toolset do it?

- And what happens if one of your favored projects, Linux OSs or distributions changes its license or gets abandoned? How hard is it to swap out?

You can’t predict the future, but you can certainly prepare for it by protecting your agency and freedom of choice.

So make your tech stack decisions today to protect the freedom of ‘future you’. Watch out for highly opinionated services and toolsets that will lock you in. But equally, remember that DIY won’t be the easy answer in any of these situations.

Don’t be afraid to follow your unique journey

We work with dozens and dozens of enterprises, from defense contractors to pharma manufacturers, small software vendors to the biggest telcos. Every one of them has the same basic pains — they need to make it safe and easy to design, deploy and manage Kubernetes clusters to run enterprise applications. But every one of them is also unique!

Some are running small form-factor edge devices in airgapped environments with high security. Some are spinning up clusters in the cloud for dev teams. Some have crazy network setups and proxies, or complex integrations with existing tooling like ServiceNow and enterprise identity providers. Some have big, highly expert teams, others just have one or two people working on Kubernetes.

So when you’re standing in the hall with thousands of other K8s enthusiasts, don’t get swept away by the cool stuff. Look for the vendors and tools that can help you navigate your own unique path to business results. And enjoy the ride! We’ll be glad to talk and share some pointers at booth J8 all week at KubeCon.

To learn more about the current state of enterprise Kubernetes, and how organizations are strategizing for the future, check out Spectro Cloud’s State of Production Kubernetes research report.

To learn more about Kubernetes and the cloud native ecosystem, join us at KubeCon + CloudNativeCon North America, in Salt Lake City, Utah, on November 12-15, 2024.