Sanctuary AI is one of the world’s leading humanoid robotics companies. Its Phoenix robot, now in its seventh generation, has dropped our jaws several times in the last few months alone, demonstrating a remarkable pace of learning and a fluidity and confidence of autonomous motion that shows just how human-like these machines are becoming.

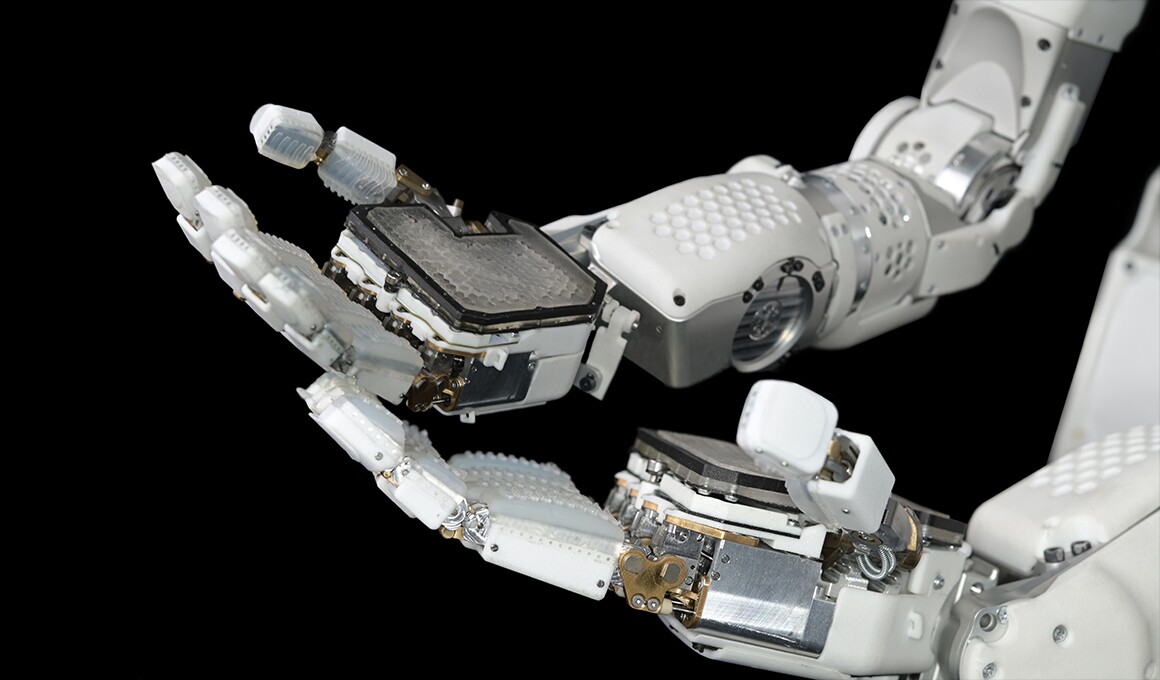

Check out the previous version of Phoenix in the video below – its micro-hydraulic actuation system gives it a level of power, smoothness and quick precision unlike anything else we’ve seen to date.

Powered by Carbon, Phoenix is now autonomously completing simple tasks at human-equivalent speed. This is an important step on the journey to full autonomy. Phoenix is unique among humanoids in its speed, precision, and strength, all critical for industrial applications. pic.twitter.com/bYlsKBYw3i

— Geordie Rose (@realgeordierose) February 28, 2024

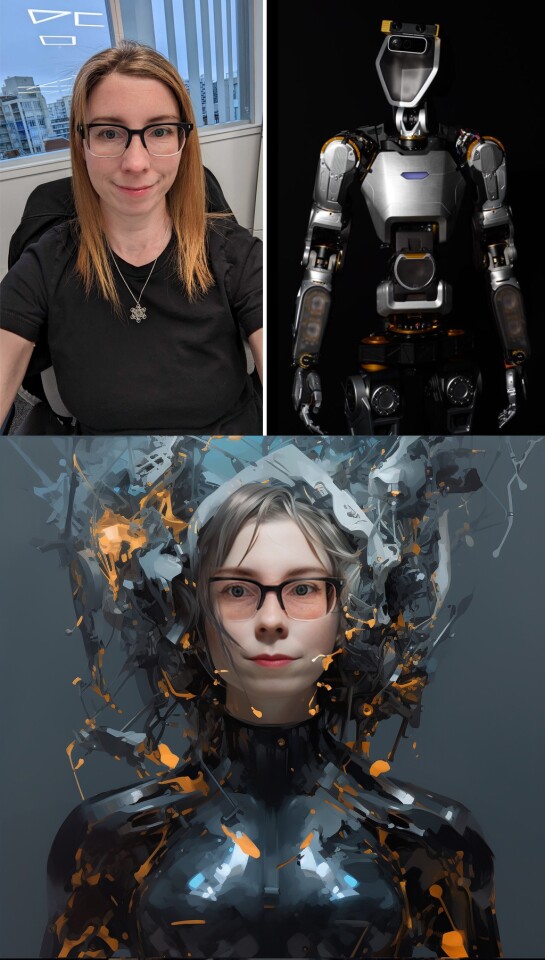

Co-founder and CTO Suzanne Gildert has spent the last six years with Sanctuary at the bleeding edge of embodied AI and humanoid robotics. It’s an extraordinary position to be in at this point; prodigious amounts of money have started flowing into the sector as investors realize just how close a general-purpose robot might be, how massively transformative it could be for society, and the near-unlimited cash and power these things could generate if they do what it says on the tin.

And yet, having been through the tough early startup days, she’s leaving – just as the gravy train is rolling into the station.

“It is with mixed emotions,” writes CEO Geordie Rose in an open letter to the Sanctuary AI team, “that we announce that our co-founder and CTO Suzanne has made the difficult decision to move on from Sanctuary. She helped pioneer our technological approach to AI in robotics and worked with Sanctuary since our inception in 2018.

“Suzanne is now turning her full-time attention to AI safety, AI ethics, and robot consciousness. We wish her the best of success in her new endeavors and will leave it to her to share more when the time’s right. I know she has every confidence in the technology we are developing, the people we have assembled, and the company’s prospects for the future.”

Gildert has made no secret of her interest in AI consciousness over the years, as evidenced in this video from last year, in which she speaks of designing robot brains that can “experience things in the same way the human mind does.”

The first step to building Carbon (our AI operating and control system) within a general-purpose robot, would be to first understand how the human brain works.

Our Co-founder and CTO @suzannegildert explains that by using experiential learning techniques, Sanctuary AI is… pic.twitter.com/U4AfUl6uhX

— Sanctuary AI (@TheSanctuaryAI) December 1, 2023

Now, there have been certain leadership transitions here at New Atlas as well – namely, I’ve stepped up to lead the Editorial team, which I mention solely as an excuse for why we haven’t released the following interview earlier. My bad!

But in all my 17 years at Gizmag/New Atlas, this stands out as one of the most fascinating, wide-ranging and fearless discussions I’ve had with a tech leader. If you’ve got an hour and 17 minutes, or a drive ahead of you, I thoroughly recommend checking out the full interview below on YouTube.

Interview: Former CTO of Sanctuary AI on humanoids, consciousness, AGI, hype, safety and extinction

We’ve also transcribed a fair whack of our conversation below if you’d prefer to scan some text. A second whack will follow, provided I get the time – but the whole thing’s in the video either way! Enjoy!

On the potential for consciousness in embodied AI robots

Loz: What’s the world that you’re working to bring about?

Suzanne Gildert: Good question! I’ve always been sort of obsessed with the mind and how it works. And I think that every time we’ve added more minds to our world, we’ve had more discoveries made and more advancements made in technology and civilization.

So I think having more intelligence in the world in general, more mind, more consciousness, more awareness, is good for the world. I guess that’s just my philosophical view.

So obviously, you can create new human minds or animal minds, but also, can we create AI minds to help populate not just the world with more intelligence and capability, but the other planets and stars? I think Max Tegmark said something like we should try and fill the universe with consciousness, which is, I think, a kind of grand and interesting goal.


This idea of AGI, and the way we’re getting there at the moment through language models like GPT, and embodied intelligence in robotics like what you guys are doing… Is there a consciousness at the end of this?

That’s a really interesting question, because I sort of changed my view on this recently. So it’s fascinating to get asked about this as my view on it shifts.

I used to be of the opinion that consciousness is just something that would emerge when your AI system was smart enough, or you had enough intelligence and the thing started passing the Turing test, and it started behaving like a person… It would just automatically be conscious.

But I’m not sure I believe that anymore. Because we don’t really know what consciousness is. And the more time you spend with robots running these neural nets, and running stuff on GPUs, it’s kind of hard to start thinking about that thing actually having a subjective experience.

We run GPUs and programs on our laptops and computers all the time. And we don’t assume they’re conscious. So what’s different about this thing?

It takes you into spooky territory.

It’s fascinating. The stuff we, and other people in this space, do is not only hardcore science and machine learning, and robotics and mechanical engineering, but it also touches on some of these really interesting philosophical and deep topics that I think everyone cares about.

It’s where the science starts to run out of explanations. But yes, the idea of spreading AI out through the cosmos… AIs seem more likely to reach other stars than we are. You kind of wish there was a humanoid on board Voyager.

Absolutely. Yeah, I think it’s one thing to send sort of dumb matter out there into space, which is kind of cool, like probes and things, sensors, maybe even AIs. But to send something that’s kind of like us, that’s sentient and aware and has an experience of the world, is a very different matter. And I’m much more interested in the second.


On what to expect in the next decade

It’s interesting. The way artificial intelligence is being built, it’s not exactly us, but it’s of us. It’s trained using our output, which is not the same as our experience. It has the best and the worst of humanity inside it, but it’s also an entirely different thing, these black boxes, Pandora’s boxes with little funnels of communication and interaction with the real world.

In the case of humanoids, that’ll be through a physical body and verbal and wireless communication; language models and behavior models. Where does that take us in the next 10 years?

I think we’ll see a lot of what looks like very incremental progress at the start, then it will sort of explode. I think anyone who’s been following the progress of language models over the last 10 years will attest to this.

Ten years ago, we were playing with language models and they could generate something on the level of a nursery rhyme. And it went on like that for a long time; people didn’t think it would get beyond that stage. But then with internet-scale data, it just suddenly exploded, it went exponential. I think we’ll see the same thing with robot behavior models.

So what we’ll see is these really early little building blocks of movement and motion being automated, and then becoming commonplace. Like, a robot can move a block, stack a block, maybe pick something up, press a button, but it’s kind of still ‘researchy.’

But then at some point, I think it goes beyond that. And it will happen very radically and very rapidly, and it will suddenly explode into robots being able to do everything, seemingly out of nowhere. But if you actually track it, it’s one of these predictable trends, just with the scale of data.

On humanoid robot hype levels

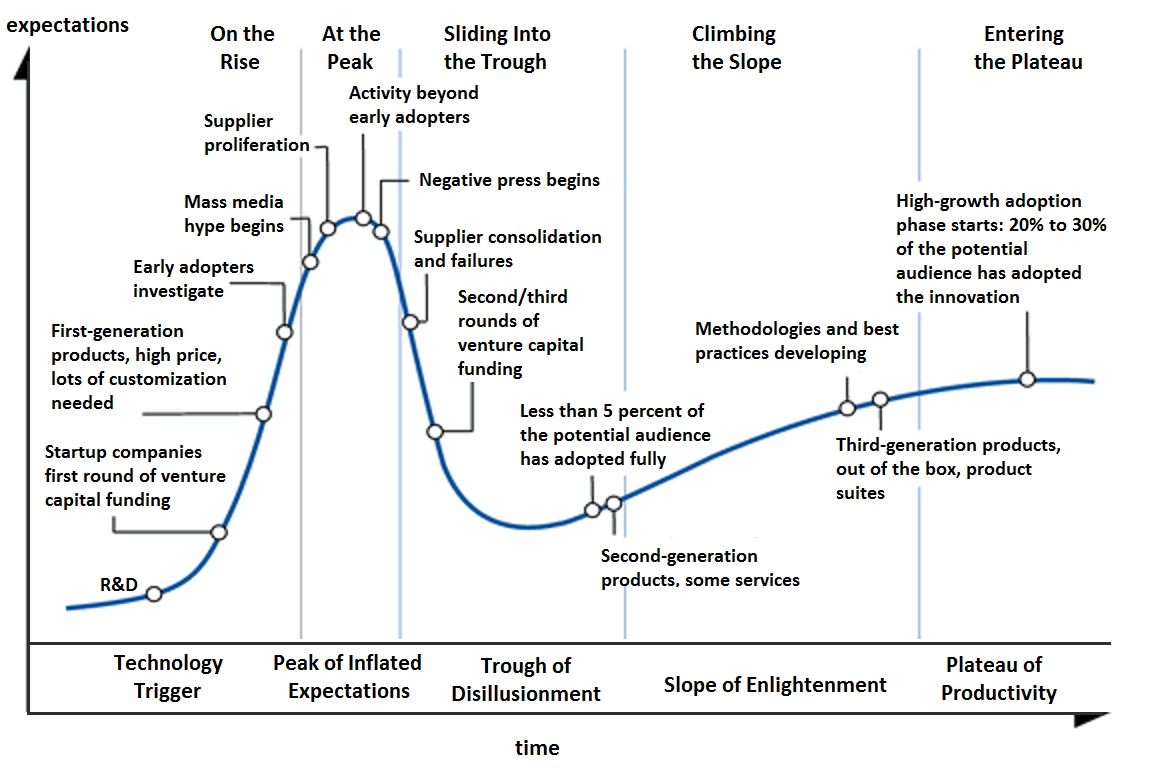

Where do humanoids sit on the old Gartner Hype Cycle, do you think? Last time I spoke to Brett Adcock at Figure, he surprised me by saying he doesn’t think that cycle will apply to these things.

I do think humanoids are kind of hyped at the moment. I actually think we’re kind of close to that peak of inflated expectations right now, and I do think there may be a trough of disillusionment that we fall into. But I also think we will probably climb out of it pretty quickly. So it probably won’t be the long, slow climb like what we’re seeing with VR, for example.

But I do still think there’s a while before these things take off completely. And the reason for that is the scale of the data you need to really make these models run in a general-purpose mode.

With large language models, data was kind of already available, because we had all the text on the internet. Whereas with humanoid, general-purpose robots, the data is not there. We’ll have some really interesting results on some simple tasks, simple building blocks of motion, but then it won’t go anywhere until we radically upscale the data to be… I don’t know, billions of training examples, if not more.

So I think that by that point, there will be a kind of a trough of ‘oh, this thing was supposed to be doing everything in a couple of years.’ And it’s just because we haven’t yet collected the data. So we will get there in the end. But I think people may be expecting too much too soon.

I shouldn’t be saying this, because we’re, like, building this technology, but it’s just the truth.

It’s good to set realistic expectations, though. Like, they’ll be doing very, very basic tasks when they first hit the workforce.

Yeah. Like, if you’re trying to build a general-purpose intelligence, you have to have seen training examples from almost anything a person can do. People say, ‘oh, it can’t be that bad, by the time you’re 10, you can basically manipulate kind of anything in the world, any machine or any objects, things like that. We won’t take that long to get that with training data.’

But what we forget is our brain was already pre-evolved. A lot of that machinery is already baked in when we’re born, so we didn’t learn everything from scratch, like an AI algorithm – we have billions of years of evolution as well. You have to factor that in.

I think the amount of data needed for a general-purpose AI in a humanoid robot that knows everything that we know… It’s going to be like evolutionary timescale amounts of data. I’m making it sound worse than it is, because the more robots you can get out there, the more data you can collect.

And the better they get, the more robots you want, and it’s kind of a virtuous cycle once it gets going. But I think there is going to be a good few years more before that cycle really starts turning.

Sanctuary AI Unveils the Next Generation of AI Robotics

On embodied AIs as robotic infants

I’m trying to think what that data gathering process might look like. You guys at Sanctuary are working with teleoperation at the moment. You wear some sort of suit and goggles, you see what the robot sees, and you control its hands and body, and you do the task.

It learns what the task is, and then goes away and creates a simulated environment where it can try that task a thousand or a million times, make mistakes, and figure out how to do it autonomously. Does this evolutionary-scale data-gathering project get to a point where they can just watch humans doing things, or will it be teleoperation the whole way?

I think the easiest way to do it is the first one you mentioned, where you’re actually training multiple different foundational models. What we’re trying to do at Sanctuary is learn the basic atomic kind of constituents of motion, if you like. So the basic ways in which the body and the hands move in order to interact with objects.

I think once you’ve got that, though, you’ve sort of created this architecture that’s a little bit like the motor memory and the cerebellum in our brain. The part that turns brain signals into body signals.

I think once you’ve got that, you can then hook in a whole bunch of other models that come from things like learning from video demonstration, or hooking in language models as well. You can leverage a lot of other types of data out there that aren’t pure teleoperation.

But we believe strongly that you need to get that foundational building block in place, of having it understand the basic types of movements that human-like bodies do, and how those movements coordinate. Hand-eye coordination, things like that. So that’s what we’re focused on.

Now, you can think of it as kind of like a six-month-old baby learning how to move its body in the world, like a baby in a stroller with some toys in front of it. It’s just kind of learning, like, where are they in physical space? How do I reach out and grab one? What happens if I touch it with one finger versus two fingers? Can I pull it towards me? These kinds of basic things that babies just innately learn.

I think that’s the point we’re at with these robots right now. And it sounds very basic. But it’s those building blocks that then are used to build up everything we do later in life and in the world of work. We need to learn those foundations first.

Eminent .@DavidChalmers42 on consciousness: “It’s impossible for me to believe [it] is an illusion…maybe it actually protects for us to believe that consciousness is an illusion. It’s all part of the evolutionary illusion. So that’s part of the charm.” .@brainyday pic.twitter.com/YWzuB7aVh8

— Suzanne Gildert (@suzannegildert) April 28, 2024

On how to stop scallywags from ‘jailbreaking’ humanoids the way they do with LLMs

Any time a new GPT or Gemini or whatever gets released, the first thing people do is try to break the guardrails. They try to get it to say rude words, they try to get it to do all the things it’s not supposed to do. They’re going to do the same with humanoid robots.

But the equivalent with an embodied robot… It could be kind of rough. Do you guys have a plan for that sort of thing? Because it seems really, really hard. We’ve had these language models out in the world getting played with by cheeky monkeys for a long time now, and there are still people finding ways to get them to do things they’re not supposed to all the time. How the heck do you put safeguards around a physical robot?

That’s just a really good question. I don’t think anyone’s ever asked me that question before. That’s cool. I like this question. So yeah, you’re absolutely right. One of the reasons that large language models have this failure mode is because they are mostly trained end to end. So you can just send in whatever text you want and get an answer back.

If you trained robots end to end in this way, with billions of teleoperation examples, verbal input coming in and action coming out, and just one giant model… At that point, you could say anything to the robot – you know, smash the windows on all these cars on the street. And the model, if it was truly a general AI, would know exactly what that meant. And it would presumably do it if that had been in the training set.

So I think there are two ways you can avoid this being a problem. One is, you never put data in the training set that would have it exhibit the kind of behaviors that you wouldn’t want. So the hope is that if you can make the training data of the type that’s ethical and moral… And obviously, that’s a subjective question as well. But whatever you put into training data is what it’s going to learn how to do in the world.

So if you literally asked it to smash a car window, it’s just going to do… whatever it has been shown is appropriate for a person to do in that situation. So that’s kind of one way of getting around it.

Just to play devil’s advocate… If you’re gonna connect it to external language models, one thing that language models are really, really good at is breaking down an instruction into steps. And that’ll be how language and behavior models interact; you’ll give the robot an instruction, and the LLM will create a step-by-step way to make the behavior model understand what it needs to do.

So, to my mind – and I’m purely spitballing here, so forgive me – in that case it’d be like, I don’t know how to smash something. I’ve never been trained on how to smash something. And a compromised LLM would be able to tell it: pick up that hammer. Go over here. Pretend there’s a nail on the window… Maybe the language model is the way through which a physical robot might be jailbroken.

It kinda reminds me of the movie Chappie: he won’t shoot a person because he knows that’s bad, but the guy says something like ‘if you stab someone, they just go to sleep.’ So yeah, there are interesting tropes in sci-fi that play around a little bit with some of these ideas.

Yeah, I think it’s an open question: how do we stop it from just breaking down a plan into units that themselves have never been seen to be morally good or bad in the training data? Take cooking, for example. In the kitchen, you often cut things up with a knife.

So a robot would learn how to do that. That’s a kind of atomic action that could then technically be applied in a general way. So I think it’s a very interesting open question as we move forward.


I think in the short term, the way people are going to get around this is by limiting the kind of language inputs that get sent into the robot. So essentially, you are trying to constrain the generality.

So the robot can use general intelligence, but it can only do very specific tasks with it, if you see what I mean? Say a robot is deployed into a customer situation where it has to stock shelves in a retail environment. At that point, no matter what you say to the robot, it will only act if it hears commands that are about things it’s supposed to be doing in its work environment.

So if I said to the robot, take all the things off the shelf and throw them on the floor, it wouldn’t do that. Because the language model would kind of reject that. It would only accept things that sound like, you know, put that on the shelf properly…
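To make that constraint concrete, here’s a minimal Python sketch of our own. It is not Sanctuary’s implementation. It assumes a shelf-stocking deployment and uses a crude keyword allowlist plus a handful of veto words as a stand-in for the language-model filter Gildert describes. `accept_command`, `ALLOWED_TASKS` and `FORBIDDEN_WORDS` are all hypothetical names.

```python
from typing import Optional

# Hypothetical allowlist for a shelf-stocking deployment: a command maps to a
# task only if every keyword listed for that task appears in it.
ALLOWED_TASKS = {
    "stock_shelf": {"put", "shelf"},
    "restock_item": {"restock"},
}

# Hypothetical veto words: any command containing one is refused outright.
FORBIDDEN_WORDS = {"throw", "smash", "break", "hit"}


def accept_command(command: str) -> Optional[str]:
    """Return the allowed task a spoken command maps to, or None to refuse it."""
    # Lowercase and turn punctuation into spaces before splitting into words.
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in command.lower())
    words = set(cleaned.split())
    if words & FORBIDDEN_WORDS:
        return None
    for task, keywords in ALLOWED_TASKS.items():
        if keywords <= words:
            return task
    # Anything that doesn't match a known work task is ignored.
    return None


print(accept_command("Put that on the shelf properly"))  # stock_shelf
print(accept_command("Take all the things off the shelf and throw them on the floor"))  # None
```

Anything outside the allowlist is simply refused. A filter this naive is also easy to talk around with a cleverly worded request, which is the same loophole-hunting problem people exploit with language models.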

I don’t want to say that there’s a solid answer to this question. One of the things that we’re going to have to think very carefully about over the next five to 10 years, as these general models start to come online, is how do we prevent them from being… I don’t want to say hacked, but misused, or people trying to find loopholes in them?

I actually think though, these loopholes, as long as we avoid them being catastrophic, can be very illuminating. Because if you said something to a robot, and it did something that a person would never do, then there’s an argument that that’s not really a true human-like intelligence. So there’s something wrong with the way you’re modeling intelligence there.

So to me, that’s an interesting feedback signal of how you might want to change the model to attack that loophole, or that problem you found in it. But, as I’m always saying when I talk to people now, this is why I think robots are going to be in research labs, and in very constrained areas when they are initially deployed.

Because I think there will be things like this, that are discovered over time. Any general-purpose technology, you can never know exactly what it’s going to do. So I think what we have to do is just deploy these things very slowly, very carefully. Don’t just go putting them in any situation straightaway. Keep them in the lab, do as much testing as you can, and then deploy them very carefully into positions maybe where they’re not initially in contact with people, or they’re not in situations where things could go terribly wrong.

Let’s start with very simple things that we’d let them do. Again, a bit like children. If you were, you know, giving your five-year-old a little chore to do so they could earn some pocket money, you’d give them something that was pretty constrained, where you’re pretty sure nothing’s gonna go terribly wrong. You give them a little bit of independence, see how they do, and sort of go from there.

I’m always talking about this: nurturing or bringing up AIs like we bring up children. Sometimes you have to give them a little bit of independence and trust them a bit, move that envelope forward. And then if something bad happens… Well, hopefully it’s not too catastrophic, because you only gave them a little bit of independence. And then we’ll start understanding how and where these models fail.

Do you have kids of your own?

I don’t, no.

Because that would be a fascinating process, bringing up kids while you’re bringing up infant humanoids… Anyway, one thing that gives me hope is that you don’t generally see GPT or Gemini being naughty unless people have really, really tried to make that happen. People have to work hard to fool them.

I like this idea that you’re kind of building a morality into them. The idea that there are certain things humans and humanoids alike just won’t do. Of course, the trouble with that is that there are certain things certain humans won’t do… You can’t exactly pick the personality of a model that’s been trained on the whole of humanity. We contain multitudes, and there’s a lot of variation when it comes to morality.

On multi-agent supervision and human-in-the-loop

Another part of it is this sort of semi-autonomous mode that you can have, where you have human oversight at a high level of abstraction. So a person can take over at any point. So you have an AI system that oversees a fleet of robots, and detects that something different is happening, or something potentially dangerous might be happening, and you can actually drop back to having a human teleoperator in the loop.

We use that for edge case handling because when our robot deploys, we want the robot to be collecting data on the job and actually learning on the job. So it’s important for us that we can switch the mode of the robot between teleoperation and autonomous mode on the fly. That might be another way of helping maintain safety, having multiple operators in the loop watching everything while the robot’s starting out its autonomous journey in life.
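The on-the-fly switch Gildert describes can be sketched roughly as follows. This is an illustrative Python sketch under stated assumptions, not Sanctuary’s system. The `Mode`, `Robot`, `supervise` and `release` names are hypothetical. A single anomaly score per robot, compared against an arbitrary 0.8 threshold, stands in for whatever the supervising AI actually detects.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    TELEOPERATED = "teleoperated"


@dataclass
class Robot:
    name: str
    mode: Mode = Mode.AUTONOMOUS


# Hypothetical threshold above which the supervisor calls in a human operator.
ANOMALY_THRESHOLD = 0.8


def supervise(fleet: List[Robot], anomaly_scores: Dict[str, float]) -> List[str]:
    """Switch any autonomous robot whose anomaly score crosses the threshold
    to teleoperation, and return the names of robots that now need an operator."""
    handoffs = []
    for robot in fleet:
        score = anomaly_scores.get(robot.name, 0.0)
        if robot.mode is Mode.AUTONOMOUS and score >= ANOMALY_THRESHOLD:
            robot.mode = Mode.TELEOPERATED
            handoffs.append(robot.name)
    return handoffs


def release(robot: Robot) -> None:
    """Hand control back to the autonomous policy once the operator is done."""
    robot.mode = Mode.AUTONOMOUS


fleet = [Robot("robot-a"), Robot("robot-b")]
print(supervise(fleet, {"robot-a": 0.93, "robot-b": 0.12}))  # ['robot-a']
```

In this sketch the supervisor only ever escalates to a human. Handing control back is left to the operator, in keeping with the idea that a person can take over at any point.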

Another way is to integrate other kinds of reasoning systems. Rather than something like a large language model – which is a black box, you really don’t know how it’s working – some symbolic logic and reasoning systems from the 60s through to the 80s and 90s do allow you to trace how a decision is made. I think there’s still a lot of good ideas there.

But combining these technologies is not easy… It’d be cool to have almost like a Mr. Spock – this analytical, mathematical AI that’s calculating the logical consequences of an action, and that can step in and stop the neural net that’s just sort of learned from whatever it’s been shown.

Enjoy the entire interview in the video below – or stay tuned for Suzanne Gildert’s thoughts on post-labor societies, extinction-level threats, the end of human usefulness, how governments should be preparing for the age of embodied AI, and how she’d be proud if these machines managed to colonize the stars and spread a new type of consciousness.

Interview: Former CTO of Sanctuary AI on humanoids, consciousness, AGI, hype, safety and extinction

Source: Sanctuary AI