I write this blog each month to help navigate through the intense pace of news and innovation we’re releasing for customers. And what a year we’ve had! We witnessed incredible advances at breathtaking speed as AI reshaped what’s possible across industries, organizations, and our day-to-day lives.

Personally, I’m loving the features and capabilities of Copilot for Microsoft 365, especially meeting summaries after a Teams call so I can quickly access the most important points and any actions. We’re still experimenting with AI to craft marketing narratives across my team and it’s exciting to see how AI enhances our own creativity.

AI technology is not brand new. It’s been improving experiences across applications and business processes for some time, but the broad availability of generative AI models and tools this year was a watershed moment.

Customers and partners made quick pivots to bring AI into their transformation roadmaps, and within months were deploying applications and services powered by AI. I have never seen a new technology generate such profound change at this pace. It shows how ready many organizations were for this moment; how investments made in the cloud, data, DevOps, and creating transformation cultures set the stage for AI adoption. This year Microsoft introduced hundreds of resources, models, services, and tools to help customers and partners maximize AI.

This month, I’m pleased to expand this blog’s scope and bring in what’s new for digital applications for a holistic look at everything we’re delivering to help customers modernize their data estate, build intelligent applications, and apply AI technologies to help achieve their business goals. Let’s go.

New models and multimodal capabilities available in Azure AI

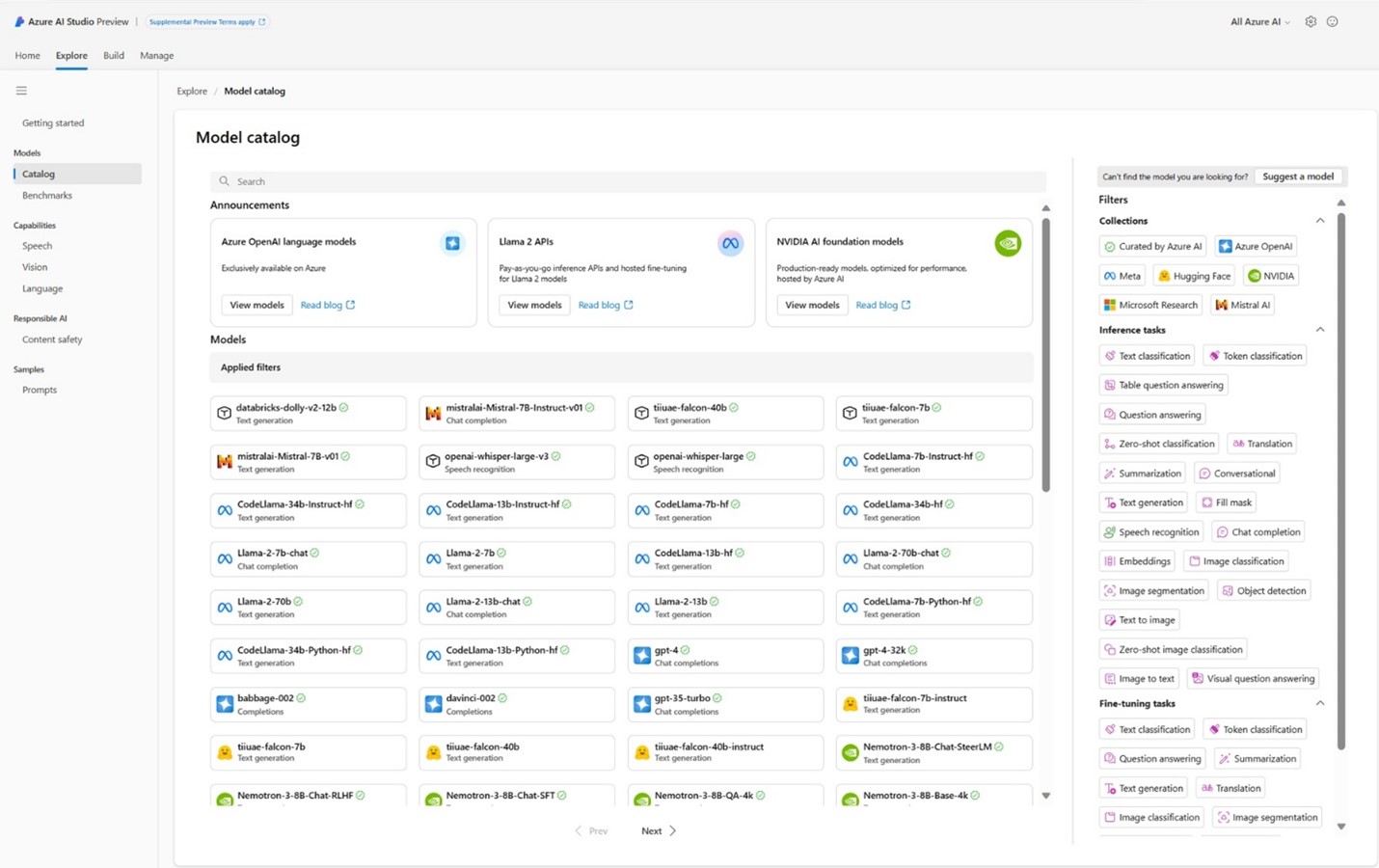

Our focus is to deliver the most cutting-edge open and frontier models available so developers can build with confidence and unlock immediate value across their organization.

Last month we announced a significant expansion of Azure OpenAI Service and introduced Models as a Service (MaaS). This is a way for model providers to offer their latest open and frontier LLMs on Azure for generative AI developers to integrate into their applications.

And just last week, we announced the availability of MaaS for Llama 2. With MaaS for Llama 2, developers can integrate with their favorite LLM tools like Prompt Flow, Semantic Kernel, and LangChain with a ready-to-use API and pay-as-you-go billing based on tokens for LLMs. This allows generative AI developers to access Llama 2 via hosted fine-tuning without provisioning GPUs, greatly simplifying the model setup and deployment process. Then they can offer their custom applications utilizing Llama 2, purchased through and hosted on the Azure Marketplace. See John Montgomery’s blog post on this announcement for all the details and a look at several other models available in the Azure AI Model Catalog.

We continue to advance our Azure OpenAI Service and recently launched several multimodal AI capabilities that empower businesses to build generative AI experiences with image, text and video, including:

- DALL·E 3, in preview: Generate images from text descriptions. Users describe an image, and DALL·E 3 creates it.

- GPT-3.5 Turbo model with a 16k token prompt length, generally available, and GPT-4 Turbo, in preview: The latest models in Azure OpenAI Service enable customers to extend prompt length and bring more control and efficiency to their generative AI applications.

- GPT-4 Turbo with Vision (GPT-4V), in preview: When integrated with Azure AI Vision, GPT-4V accepts images or videos along with text as input for generating text output, and benefits from Azure AI Vision enhancements like video analysis.

- Fine-tuning of Azure OpenAI Service models: Fine-tuning is now generally available for Azure OpenAI Service models including Babbage-002, Davinci-002, and GPT-35-Turbo. Developers and data scientists can customize these Azure OpenAI Service models for specific tasks. Learn more about fine-tuning here.

- GPT-4 updates: Azure OpenAI Service has also rolled out updates to GPT-4, including the ability for fine-tuning. Fine-tuning will allow organizations to customize the AI model to better suit their specific needs. It’s akin to tailoring a suit to fit perfectly, but in the world of AI. Updates to GPT-4 are in preview.

Steering at the frontier: Extending the power of prompting

The power of prompting in GPT-4 continues to amaze! A recent Microsoft Research blog discusses promptbase, an approach to prompting GPT-4 that harnesses its powerful reasoning abilities. Across a wide variety of test sets (including the ones used to benchmark the recently announced Gemini Ultra), GPT-4 gets better results than other AI models (including Gemini Ultra). And it does this with zero-shot chain-of-thought prompting. Read more in the blog post; the resources are available on GitHub to try right now.
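To make the idea of zero-shot chain-of-thought prompting concrete, here is a minimal sketch in plain Python. It is not code from promptbase; the function names `build_zero_shot_cot_prompt` and `extract_answer`, and the exact prompt wording, are assumptions chosen for illustration. "Zero-shot" means the prompt contains no worked examples, and "chain-of-thought" means the model is asked to reason step by step before answering.

```python
import re
from typing import List, Optional


def build_zero_shot_cot_prompt(question: str, choices: List[str]) -> str:
    """Assemble a multiple-choice prompt with no worked examples (zero-shot)
    that asks the model to reason step by step (chain-of-thought).
    The wording is illustrative, not taken from promptbase."""
    labels = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    lines = [f"Question: {question}"]
    for label, choice in zip(labels, choices):
        lines.append(f"{label}. {choice}")
    lines.append(
        "Let's think step by step, then give the final answer "
        "on its own line as 'Answer: <letter>'."
    )
    return "\n".join(lines)


def extract_answer(completion: str) -> Optional[str]:
    """Return the last 'Answer: X' letter found in a completion, or None."""
    matches = re.findall(r"Answer:\s*([A-Z])", completion)
    return matches[-1] if matches else None


if __name__ == "__main__":
    prompt = build_zero_shot_cot_prompt("What is 2 + 2?", ["3", "4", "5"])
    print(prompt)
    # A model's reply would be passed to extract_answer; this one is hand-written.
    print(extract_answer("2 + 2 equals 4, which is option B.\nAnswer: B"))
```

The prompt string would be sent to whichever GPT-4 deployment you use; that call is left out here because it depends on your client library and configuration.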

Infuse Responsible AI (RAI) tools and practices in your LLMOps

As AI adoption matures and companies put their AI apps into production, it’s important to consider the safety boundaries supporting the short- and long-term ROI of those applications. This month, we continued our series on LLMOps for business leaders with an article focused on how to infuse responsible AI into your AI development lifecycle. We highlight best practices and tools in Azure AI Studio to help development teams put their principles into practice.

Azure AI Advantage offer

Azure Cosmos DB is the cloud database for the Era of AI. It supports built-in AI, including natural language queries, AI vector search capabilities, and simple AI integration with Azure AI Search. We’re investing in helping customers discover these benefits with our new Azure AI Advantage offer, which helps new and existing Azure AI and GitHub Copilot customers save when using Azure Cosmos DB by providing 40,000 Request Units per second (RU/s) of Azure Cosmos DB for 90 days.

What’s new in the Azure data platform—because AI is only as good as your data

Every intelligent app starts with data, so a modern data and analytics platform is essential for any AI transformation.

One example of this is how multinational law firm Clifford Chance leverages new technologies to benefit their clients. The firm built a solid data platform on Azure to innovate with new technologies, including the new generation of large language models, Azure OpenAI and Microsoft 365 Copilot. Early innovations are already delivering value, with cognitive translation proving to be one of the fastest growing products their IT team has ever released.

And Belfius, a Belgian insurance company, built on the Microsoft intelligent data platform using services like Azure Machine Learning and Azure Databricks to reduce development time, increase efficiency, and gain reliability. As a result, their data scientists can focus on creating and transforming features and the company can better detect fraud and money laundering.

Azure and Databricks: Co-innovation for powerful AI experiences

At Microsoft Ignite 2023 in November, the benefits of maturing AI tools and services were in full view as customers and partners shared how Microsoft is empowering them to achieve more.

Databricks is one of our most strategic partners, with some of the fastest growing data services on Azure. We recently showcased our co-innovation, including Azure Databricks interoperability with Microsoft Fabric, and how Azure Databricks is taking advantage of Azure OpenAI to deliver AI experiences for Azure Databricks’ customers. This means customers can take advantage of LLMs in Azure OpenAI as they build AI capabilities like retrieval-augmented generation (RAG) applications on Azure Databricks, and then use Power BI in Fabric to analyze the output.
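For readers new to retrieval-augmented generation, the pattern can be sketched in a few lines of plain Python: retrieve the passages most relevant to a question, then place them in the prompt so the model answers from that context. This is an illustration only, not Azure Databricks or Azure OpenAI code. The function names are hypothetical, and simple word overlap stands in for the embedding-based retrieval a production system would typically use.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by how many words they share with the query.
    Word overlap is a stand-in for real vector similarity."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Build a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Azure Databricks integrates with Microsoft Fabric.",
        "Power BI visualizes data in Fabric.",
        "LEGO House is in Denmark.",
    ]
    print(build_rag_prompt("How does Azure Databricks work with Fabric?", docs))
```

The resulting prompt would then be sent to an LLM deployment; that step is omitted because it depends on the client and model you choose.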

If you missed Databricks’ CEO Ali Ghodsi on stage with Scott Guthrie at Ignite, I encourage you to check out the replay of Scott’s full segment Microsoft Cloud in the era of AI. If you only have a few minutes, his wrap up on LinkedIn is a great option, too. It’s a helpful overview of how the Microsoft Cloud is uniquely positioned to empower customers to transform by building AI solutions and unlocking data insights using the same platform and services that power all of Microsoft’s comprehensive solutions.

And for a closer look at our latest work with Databricks, check out the click-thru version of the Modern Analytics with Microsoft Fabric and Azure Databricks DREAM Lab from Ignite.

Now in preview: Azure AI extension for Azure Database for PostgreSQL

The new Azure AI extension allows developers to leverage large language models (LLMs) in Azure OpenAI to generate vector embeddings and build rich generative AI applications on PostgreSQL. These powerful new capabilities, combined with existing support for the pgvector extension, make Azure Database for PostgreSQL another great destination for building AI-powered apps. Learn more.
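To show what searching over vector embeddings involves, here is a minimal sketch in plain Python that ranks stored vectors by cosine similarity to a query vector. In the database this work is done by the pgvector extension; the code below does not call it or the Azure AI extension. The function names and the tiny two-dimensional vectors are assumptions for illustration, whereas real embeddings have hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: List[float], items: Dict[str, List[float]], k: int = 1
) -> List[Tuple[str, float]]:
    """Return the k stored items most similar to the query vector."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in items.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy "embeddings" keyed by a hypothetical document id.
    store = {"doc_a": [1.0, 0.0], "doc_b": [0.0, 1.0], "doc_c": [1.0, 1.0]}
    for name, score in top_k([1.0, 0.1], store, k=2):
        print(name, round(score, 3))
```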

Azure Arc brings cloud innovation to SQL Server anywhere

This month, we’re introducing a new set of enhanced manageability and security capabilities from SQL Server enabled by Azure Arc. With Monitoring for SQL Server, customers can gain critical insights into their entire SQL Server estate and optimize for database performance. Customers can also view and manage Always On availability groups, failover cluster instances, and backups directly from the Azure portal, with better visibility and simplicity. Lastly, with Extended Security Updates as a service and automated patching, customers can keep their apps secure, compliant, and up to date at all times. Learn more.

Lower pricing for Azure SQL Database Hyperscale compute

New pricing on Azure SQL Database Hyperscale offers cloud-native workloads the performance and security of Azure SQL at the price of commercial open-source databases. Hyperscale customers can save up to 35% on the compute resources they need to build scalable, AI-ready cloud applications of any size and I/O requirement. The new pricing is now available. Learn more.

Digital applications deliver transformational operations and experiences

This era of AI is brought to life through digital applications developed and deployed by companies putting AI to work to enhance their operations and experiences—like a personalized app experience for employees or a customized chatbot for end customers. Here are some recent updates that help make all this innovation possible.

The seven pillars of modern AI development: Leaning into the era of custom copilots

Copilots are generating a lot of excitement, and with Azure AI Studio in public preview, developers have a platform purpose-built for generative AI application development. As we lean into this new era, it’s important for businesses to carefully consider how to design a durable, adaptable, and effective approach. How can AI developers ensure their solutions enhance customer engagement? Here are seven pillars to think through when building your custom copilot.

AKS is a leading platform for modern cloud native and intelligent applications

The future of app development is at the intersection of AI and cloud-native technologies like Kubernetes. Cloud-native technologies and AI work hand in hand to fuel innovation at scale, and Azure Kubernetes Service (AKS) provides the scale customers need to run their compute-intensive workloads like AI and machine learning. Check out Brendan Burns’s recent blog from KubeCon to learn how Microsoft is building and servicing open-source communities that benefit our customers.

There are no limits to your innovation with Azure

It has been amazing to see the tech community and customer response to our recent news, resources, and features across the portfolio, particularly digital applications.

Resources like the Platform Engineering Guide launched at Ignite have been incredibly popular, demonstrating the demand and appetite for this kind of training material.

Seeing how organizations innovate with the technology is what it’s all about.

Here are a couple of customer stories that caught my attention recently.

Modernizing interactive experiences across LEGO House with Azure Kubernetes Service

We’re collaborating with The LEGO House in Denmark—the ultimate LEGO experience center for children and adults—to migrate custom-built interactive digital experiences from an aging on-premises data center to Microsoft Azure Kubernetes Service (AKS) to improve stability, security, and the ability to iterate and collaborate on new guest experiences. This shift to the cloud enables LEGO House to more quickly update these experiences as they learn from guests. As the destination modernizes, it hopes to share learnings and technologies with the broader LEGO Group ecosystem, like LEGOLAND and brand retail stores.

Gluwa chose Azure for a reliable, scalable cloud solution to bring banking to emerging, underserved markets and close the financial gap

An estimated 1.4 billion people lack access to basic financial services because they live in a country with limited financial infrastructure, making it difficult to get credit or personal and business loans. Gluwa with Creditcoin uses blockchain technology to differentiate their business through borderless financial technology and chose Azure as the foundation to support it. Using a wide combination of our services and solutions—.NET framework, Azure Container Instances, AKS, Azure SQL, Azure Cosmos DB, and more—Gluwa has a strong platform to support their offerings. The business has also boosted operational efficiency with reliable uptime, stable services, and rich product offerings.

CARIAD creates a service platform for Volkswagen Group vehicles with Azure and AKS

With the automotive industry shifting to software-defined vehicles, CARIAD, the Volkswagen Group software subsidiary, collaborated with Microsoft using Azure and AKS to create the CARIAD Service Platform for providing automotive applications to brands like Audi, Porsche, Volkswagen, Seat and Skoda. This platform powers and accelerates the development and service of vehicle software by CARIAD’s developers, helping software become an advantage for the Volkswagen Group in the next generation of automotive mobility.

DICK’S Sporting Goods creates an omnichannel customer experience using Azure Arc and AKS

To create a more consistent, personalized experience for customers across its 850 stores and its online retail experience, DICK’S Sporting Goods envisioned a “one store” technology strategy with the ability to write, deploy, manage, and monitor its store software across all locations nationwide—and reflect those same experiences through its eCommerce site. DICK’S needed a new level of modularity, integration, and simplicity to seamlessly connect its public cloud environment with its computing systems at the edge. With the help of Microsoft, DICK’S Sporting Goods is migrating its on-premises infrastructure to Microsoft Azure and creating an adaptive cloud environment composed of Azure Arc and Azure Kubernetes Service (AKS). Now the retailer can easily deploy new applications to every store to support a ubiquitous experience.

Azure Cobalt delivers performance and efficiency for intelligent applications

Azure provides hundreds of services supporting the performance demands of cloud native and intelligent applications. Our work to maximize performance and efficiency now extends to the silicon powering Azure. We recently introduced Azure Maia, our first custom AI accelerator series to run cloud-based training and inferencing for AI workloads, and our custom in-house CPU series, Azure Cobalt, the first CPU designed by us, specifically for the Microsoft Cloud.

Cobalt 100, the first generation in the series, is a 64-bit 128-core chip that delivers up to 40% performance improvement over current generations of Azure Arm chips and can power services such as Microsoft Teams and Azure SQL.

With Cobalt, we will deliver performance and economics to meet the demands of resource-intensive workloads like intelligent applications using generative AI for at-scale workloads. Our customized silicon and system features include dynamic power capabilities that can be tuned per-core based on the workload, leveraging the co-optimization of hardware with software to deliver best-in-class performance efficiency. Check out Omar Khan’s blog, Microsoft Azure delivers purpose-built cloud infrastructure in the era of AI, for more on how we’re redefining cloud infrastructure from systems to silicon to maximize the era of AI.

Don’t just take it from me and Omar. Rani Borkar, CVP, Azure Hardware Systems, shares how Cobalt delivers differentiated performance—and can even help drive toward achieving sustainability goals:

“We’re building sustainability into every part of our hardware for the cloud, from our silicon to the servers. This starts in the design phase, and on Cobalt we made those intentional design choices to be able to control performance and power consumption per core and on every single VM. We’re pleased with the performance we’ve seen testing Cobalt on internal workloads like Teams and Azure SQL, and looking forward to rolling this out more widely to customers as a VM offering next year.”

Grow your data, AI, and intelligent applications understanding and skillset

I often use December downtime to do a deep dive into a new technology or learn a new skill. I’m a data geek, and I have fond memories from a few years ago when I worked through more than a dozen Power BI trainings and loved every minute of it. Demystifying AI and helping customers build the right skillsets is just one way Microsoft is empowering AI transformation. Here are some new learning resources if you are like me and plan to end the year learning something new!

AI in a Minute: Looking for help ramping your teams on generative AI? Or maybe you want to go to end-of-year gatherings prepared to discuss AI and its possibilities? Our new “AI in a Minute” video series explains generative AI basics in short, snackable bites anyone can digest, regardless of job title, level, or industry.

GenAI for beginners: This free 12-lesson course is designed to teach beginners everything they need to know to start building with generative AI.

Microsoft Learn Cloud Skills Challenge—Microsoft Ignite Edition: Skill up for in-demand Data & AI tech scenarios and enter to win a VIP pass to the next Microsoft Ignite or Microsoft Build by completing a challenge by January 15, 2024.

Cloud Workshop for the SQL Professional: If you missed this workshop at the PASS Data Summit, the labs are available to go through at your own pace. Our next workshop will be in March at the Microsoft Fabric Community Conference, so be sure to register and come see us in Las Vegas.

Accelerate your AI journey with key solution accelerators: Get started building intelligent apps on Azure with newly published demos, GitHub repos, and Hackathon content. Customers and partners can “Build Your Own Copilot” using a click-through demo, sellers and partners can run a Hackathon with customers, and developers can leverage a code repo to quickly build solutions in their Azure account. Get started here: Build Modern AI Apps, Hackathon, Vector search AI Assistant.

What will you build in 2024? Transform your business with a trusted partner

The ability of AI to accelerate transformation across industries, organizations, and daily life will certainly continue at an intense pace in 2024. Microsoft is proud to be your trusted partner in this era of AI and we’re committed to helping you achieve more for your business. I am excited to see how data, AI, and digital applications innovation unfolds for your business in the new year!