Google has announced a new project that aims to leverage generative AI to build contextually relevant UIs.

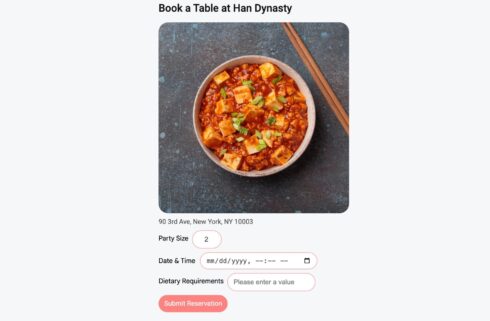

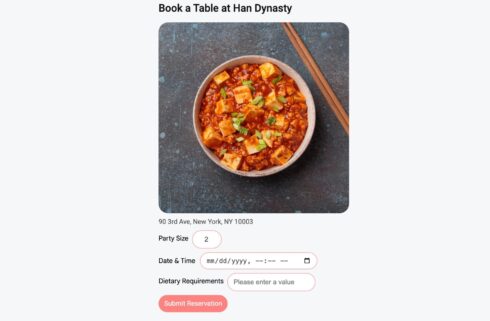

A2UI is an open source tool that generates UIs based on the current conversation’s needs. For example, an agent designed to help users book restaurant reservations would be more useful if it featured an interface to input the party size, date and time, and dietary requirements, rather than the user and agent going back and forth discussing that information in a regular conversation. In this scenario, A2UI can help generate a UI with input fields for the necessary information to complete a reservation.

“With A2UI, LLMs can compose bespoke UIs from a catalog of widgets to provide a graphical, beautiful, easy to use interface for the exact task at hand,” Google wrote in a blog post.

Google had previously created the Agent-to-Agent (A2A) protocol to allow agents to collaborate without needing to share memory, tools, or context. However, the decentralization it creates leads to a UI problem. When an agent lives in an application, it can directly manipulate the view layer, but in multi-agent systems, the agent is often executing tasks remotely and cannot directly influence the UI.

“Historically, rendering UI from a remote, untrusted source meant sending HTML or JavaScript and sandboxing it inside iframes. This approach is heavy, can be visually disjointed (it rarely matches your app’s native styling), and introduces complexity around security boundaries. We needed a way to transmit UI that is safe like data, but expressive like code,” the company explained.

A2UI offers a standard declarative data format for agents to generate structured output that can then be sent to the client application, which renders it using its own native UI components, retaining full control over styling and security.

According to Google, this helps eliminate security risks, as the client is not getting executable code from the agent. The client application maintains a catalog of trusted UI components and the agent can only request to render components already in that catalog.
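To make the catalog idea concrete, here is a minimal sketch of how a client might check an agent's output against an allowlist before rendering anything. The component names, property names, and the `validate_component` helper are hypothetical and chosen for illustration; they are not taken from the actual A2UI schema.

```python
# Hypothetical client-side catalog: component types mapped to the
# properties the client is willing to accept for each one.
# These names are illustrative assumptions, not the real A2UI schema.
TRUSTED_CATALOG = {
    "Text": {"text"},
    "TextField": {"label", "value"},
    "DatePicker": {"label", "value"},
    "Button": {"label", "action"},
}


def validate_component(component):
    """Return a list of problems; an empty list means the component is acceptable."""
    kind = component.get("type")
    if kind not in TRUSTED_CATALOG:
        return [f"unknown component type: {kind!r}"]
    allowed = TRUSTED_CATALOG[kind]
    return [
        f"property {prop!r} not allowed on {kind}"
        for prop in component.get("props", {})
        if prop not in allowed
    ]


# Example agent output: one valid form field and one component the
# client has never registered.
agent_output = [
    {"id": "party", "type": "TextField", "props": {"label": "Party size"}},
    {"id": "evil", "type": "Script", "props": {"src": "https://example.invalid/x.js"}},
]

accepted = [c for c in agent_output if not validate_component(c)]
rejected = [c for c in agent_output if validate_component(c)]
```

In this sketch the agent's payload is treated purely as data: anything outside the catalog is dropped rather than interpreted, which is the property the article attributes to A2UI's design.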

Additionally, the UI is represented as a list of components with ID references that the agent can generate incrementally, enabling progressive rendering and a responsive user experience.
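One way to picture progressive rendering from a flat, ID-referenced list is the sketch below. It assumes a hypothetical message shape (`id`, `type`, `children`) and a made-up `Surface` class; the real A2UI wire format may differ.

```python
class Surface:
    """Collects components as they arrive and renders whatever is resolvable so far.

    Hypothetical illustration only; not the A2UI client API.
    """

    def __init__(self, root_id):
        self.root_id = root_id
        self.components = {}  # component id -> component description

    def receive(self, component):
        # A later message with the same id replaces the earlier one.
        self.components[component["id"]] = component

    def render(self, component_id=None, depth=0):
        """Return an indented outline; unresolved references show as placeholders."""
        if component_id is None:
            component_id = self.root_id
        indent = "  " * depth
        component = self.components.get(component_id)
        if component is None:
            return [f"{indent}<pending {component_id}>"]
        lines = [f"{indent}{component['type']}#{component_id}"]
        for child_id in component.get("children", []):
            lines.extend(self.render(child_id, depth + 1))
        return lines


surface = Surface("form")

# The container arrives first and refers to children that do not exist yet.
surface.receive({"id": "form", "type": "Column", "children": ["party", "submit"]})
partial = surface.render()

# The children arrive later; the same render call now resolves them.
surface.receive({"id": "party", "type": "TextField"})
surface.receive({"id": "submit", "type": "Button"})
complete = surface.render()
```

Because children are referenced by ID rather than nested, the client can draw the container immediately and fill in each child as it streams in. The sketch does not guard against cyclic references, which a real client would need to handle.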

Finally, it is designed to be framework-agnostic, separating the UI structure from the UI implementation. The agent sends a description of the component tree and data model, and the client application maps those to its own components. This allows the same A2UI JSON payload to be rendered by multiple clients built with different frameworks.
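The separation of structure from implementation can be illustrated with a small sketch in which one JSON payload is handed to two different renderers. The payload shape and both renderer tables are assumptions made for this example, standing in for, say, a web client and a terminal client.

```python
import html
import json

# A hypothetical payload; the field names are illustrative, not the A2UI schema.
PAYLOAD = json.loads("""
[
  {"id": "title", "type": "Text", "props": {"text": "Book a table"}},
  {"id": "party", "type": "TextField", "props": {"label": "Party size"}}
]
""")

# Each client owns its mapping from abstract component type to concrete output.
WEB_RENDERERS = {
    "Text": lambda c: "<p>" + html.escape(c["props"]["text"]) + "</p>",
    "TextField": lambda c: (
        "<label>" + html.escape(c["props"]["label"])
        + ' <input name="' + html.escape(c["id"]) + '"></label>'
    ),
}

TERMINAL_RENDERERS = {
    "Text": lambda c: c["props"]["text"].upper(),
    "TextField": lambda c: c["props"]["label"] + ": ____",
}


def render(payload, renderers):
    """Render every component using the client's own implementation table."""
    return [renderers[c["type"]](c) for c in payload]


web_output = render(PAYLOAD, WEB_RENDERERS)
terminal_output = render(PAYLOAD, TERMINAL_RENDERERS)
```

The agent never learns which client it is talking to; it only describes what should appear, and each client decides how.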

“The space for agentic UI is evolving rapidly, with excellent tools emerging to solve different parts of the stack. We view A2UI not as a replacement for these frameworks, but as a specialized protocol that aims to solve the specific problem of interoperable, cross-platform, generative or template-based responses,” Google wrote.