Parameter-efficient fine-tuning (PEFT) methods adapt large language models by updating only a small number of weights. However, a large body of interpretability work has shown that representations encode rich semantic information. This suggests that editing representations could be a more powerful alternative to updating weights. Pre-trained large models are often fine-tuned for new domains or tasks. Fine-tuning lets a single base model adapt to a wide variety of tasks, even when only a small amount of in-domain data is available. However, fine-tuning an entire model is resource-intensive and expensive, especially for language models with a very large number of parameters.

Parameter-efficient fine-tuning or PeFT methods tackle the high cost of fine-tuning the whole model by updating only a small fraction of the total weights, which reduces both training time and memory usage. More importantly, PEFT methods have matched the performance of full fine-tuning in several practical settings. Adapters are a common family of PEFT methods. They learn an edit that is added to an additional set of weights, and these weights operate alongside the frozen base model. Recent adapters such as LoRA reduce the number of trainable parameters further by learning low-rank approximations of the weight updates instead of full weight matrices.

Previous work suggests that editing representations might be a better alternative to PEFT methods. In this article, we will talk about Representation Fine-tuning (ReFT) methods, which operate on a frozen model and learn task-specific interventions on hidden representations. This article covers the ReFT framework in depth. We explore its mechanism, methodology and architecture, and compare it with state-of-the-art frameworks. So let’s get started.

To adapt pre-trained language models to new domains and tasks, current frameworks frequently fine-tune them. Fine-tuning allows a single base model to be adapted to a variety of tasks, even with a small amount of in-domain data. Although fine-tuning boosts overall performance, it is expensive, especially when the language model has a very large number of parameters. To reduce these costs, PEFT frameworks update only a small fraction of the total weights. This cuts both training time and memory usage, while still achieving performance similar to full fine-tuning in practical scenarios. Adapters, a common family of PEFTs, learn an edit that is added to an additional set of weights, and these weights operate in unison with the frozen base model. Recent adapter frameworks such as LoRA learn low-rank weight updates. QLoRA has demonstrated that it is possible to train full-precision adapters on top of reduced-precision models without hurting performance. Adapters are usually more efficient and effective than other methods that introduce new model components.

A defining characteristic of current state-of-the-art PEFT frameworks is that they modify weights rather than representations. However, interpretability research has shown that representations encode rich semantic information. This suggests that editing representations might be a more powerful approach than updating weights.

This hypothesis forms the foundation of the ReFT (Representation Fine-tuning) framework. ReFT trains interventions instead of adapting model weights. The model manipulates a small fraction of all representations to steer its behavior toward solving downstream tasks at inference time. ReFT methods are drop-in replacements for weight-based PEFT frameworks. The approach draws inspiration from recent interpretability work that intervenes on representations, both to find faithful causal mechanisms and to steer model behavior at inference time. ReFT can therefore be seen as a generalization of these representation-editing methods.

Building on this, LoReFT (Low-rank Linear Subspace ReFT) is a strong and efficient instance of ReFT. It intervenes on hidden representations in the linear subspace spanned by a low-rank projection matrix, and it builds directly on the Distributed Alignment Search (DAS) framework.
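The following minimal pure-Python sketch makes the LoReFT parameterization concrete. It assumes the formulation from the ReFT paper, Phi(h) = h + R^T (W h + b - R h). Here R is an r × d projection with orthonormal rows, while W (r × d) and b (length r) are learned. The toy matrices below are made up for illustration, and nothing is trained. Because R R^T = I, the edited vector's coordinates inside the subspace become W h + b, and its component orthogonal to that subspace is left untouched.

```python
# Minimal LoReFT sketch in pure Python (toy values, nothing is trained).
# Phi(h) = h + R^T (W h + b - R h), with R (r x d) having orthonormal rows.

def matvec(M, v):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

def loreft(h, R, W, b):
    """Edit one hidden representation h (length d) inside the subspace spanned by R."""
    target = [w + bi for w, bi in zip(matvec(W, h), b)]   # W h + b, length r
    current = matvec(R, h)                                # R h, length r
    delta = [t - c for t, c in zip(target, current)]      # change within the subspace
    return [hi + ci for hi, ci in zip(h, matvec(transpose(R), delta))]

# d = 4, r = 2; the two rows of R are orthonormal.
R = [[0.6, 0.8, 0.0, 0.0],
     [0.0, 0.0, 0.6, 0.8]]
W = [[0.5, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 1.0]]
b = [0.1, -0.2]
h = [1.0, 2.0, 3.0, 4.0]

h_new = loreft(h, R, W, b)
print(h_new)
```

Under this parameterization, only R, W and b would be trained, which comes to 2rd + r values per intervention. The base model's weights stay frozen.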

Unlike full fine-tuning, PEFT trains only a small fraction of a model's parameters and still adapts the model to downstream tasks. PEFT methods fall into three main categories:

- Adapter-based methods: Adapter-based methods train additional modules, such as fully-connected layers, on top of a pre-trained model with frozen weights. Series adapters insert components between the language model's attention and multilayer perceptron (MLP) layers, whereas parallel adapters add modules alongside existing components. Adapters add new components that cannot easily be folded into the existing model weights, so they impose an additional burden at inference time.

- LoRA: LoRA and its recent variants approximate additive weight updates during training with low-rank matrices. They require no additional inference overhead, because the weight updates can be merged into the model. This is why they are considered the strongest current PEFT frameworks.

- Prompt-based methods: Prompt-based methods add randomly initialized soft tokens to the input and train their embeddings while keeping the language model's weights frozen. Their performance is often unsatisfactory compared with other PEFT approaches, and they also carry a significant inference overhead.
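A small sketch can check the claim that LoRA adds no inference overhead. This toy pure-Python example uses made-up values at rank r = 1. It runs a low-rank update B A alongside a frozen weight W, then folds B A into W and confirms that both give the same output. The last lines compare parameter counts for a single hypothetical 4096 × 4096 weight matrix at rank 8.

```python
# Toy LoRA sketch (pure Python): frozen W (d_out x d_in) plus a low-rank update
# B @ A, with B (d_out x r) and A (r x d_in). All values are made up.

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 0.0]]        # frozen weight, d_out = 2, d_in = 3
B = [[1.0], [0.5]]           # trainable, d_out x r with r = 1
A = [[0.0, 2.0, 1.0]]        # trainable, r x d_in
x = [1.0, 2.0, 3.0]

# Training-time view: the low-rank branch runs alongside the frozen layer.
y_adapter = [w + u for w, u in zip(matvec(W, x), matvec(B, matvec(A, x)))]

# Inference-time view: fold B @ A into W once; the layer costs the same as before.
W_merged = add(W, matmul(B, A))
y_merged = matvec(W_merged, x)

def lora_params(d_out, d_in, r):
    """Trainable parameters of one LoRA update: B (d_out x r) plus A (r x d_in)."""
    return r * (d_in + d_out)

print(y_adapter, y_merged)
print(4096 * 4096, lora_params(4096, 4096, 8))  # 16777216 65536
```

The merge is possible because the update is additive, so x(W + BA) equals xW + xBA. Adapters that add new modules do not have this property.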

Instead of updating weights, the ReFT framework learns interventions that modify a small fraction of the total representations. Recent work on representation engineering and activation steering has shown something related. Adding fixed steering vectors to the residual stream can give a degree of control over the generations of pre-trained large models, without resource-intensive fine-tuning. Other frameworks have shown that editing representations with a learned scaling and translation operation can approach, but not surpass, the performance of LoRA adapters on a wide array of tasks while using fewer learned parameters. The success of these methods across a range of tasks shows that the representations learned by pre-trained language models carry rich semantics. However, their performance remains sub-optimal. As a result, PEFTs remain the state-of-the-art approach, with no additional inference burden.
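The two representation edits described above can be sketched in a few lines. This is an illustration under assumptions, not any specific published implementation. The steering edit adds a fixed vector v, scaled by a hypothetical strength `alpha`, to a hidden vector. The second edit assumes an elementwise learned scale and shift, which means two trainable vectors the size of the hidden state.

```python
# Two toy representation edits on a single hidden vector h (pure Python).

def add_steering_vector(h, v, alpha=1.0):
    """Activation steering: add a fixed vector v, scaled by alpha, to h."""
    return [hi + alpha * vi for hi, vi in zip(h, v)]

def scale_and_translate(h, scale, shift):
    """Elementwise scaling and translation; scale and shift would be the learned parameters."""
    return [s * hi + t for hi, s, t in zip(h, scale, shift)]

h = [0.5, -1.0, 2.0]
steer = [1.0, 0.0, -1.0]
print(add_steering_vector(h, steer, alpha=2.0))                  # [2.5, -1.0, 0.0]
print(scale_and_translate(h, [2.0, 1.0, 0.5], [0.0, 1.0, 0.0]))  # [1.0, 0.0, 1.0]
```

Neither edit touches the model's weights; both act only on the hidden vector passed through them.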

ReFT: Methodology and Architecture

To keep the presentation simple, the ReFT framework assumes a transformer-based language model as its target, one that produces contextualized representations of a sequence of tokens. For a sequence of n input tokens, the model first embeds the tokens into a list of representations. Each of the m layers then computes a new list of hidden representations as a function of the previous list. Each hidden representation is a vector, and the language model uses the final hidden representations to produce its predictions. The ReFT framework considers both masked and autoregressive language models. According to the linear representation hypothesis, neural networks encode concepts within linear subspaces of their representations. Recent work has found evidence for this claim in neural networks trained on natural language as well as on other input distributions.
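The following toy sketch illustrates the notation. Write h^(0) for the list of token embeddings, and h^(j) for the list produced by layer j from h^(j-1). The code builds that sequence of lists. `mixing_layer` is a hypothetical stand-in for a transformer block: it adds the mean over positions so that each vector depends on the whole sequence. It is not real attention.

```python
from typing import Callable, Dict, List

Vector = List[float]
Layer = Callable[[List[Vector]], List[Vector]]

def forward(tokens: List[int], embed: Dict[int, Vector], layers: List[Layer]) -> List[List[Vector]]:
    """Return every list of hidden representations h^(0), ..., h^(m)."""
    hidden = [[list(embed[t]) for t in tokens]]   # h^(0): one vector per token
    for layer in layers:                          # the m layers, applied in order
        hidden.append(layer(hidden[-1]))          # h^(j) is computed from h^(j-1)
    return hidden

def mixing_layer(hs: List[Vector]) -> List[Vector]:
    """Hypothetical contextualizing layer: add the mean over all positions to each vector."""
    mean = [sum(col) / len(hs) for col in zip(*hs)]
    return [[x + m for x, m in zip(h, mean)] for h in hs]

embed = {0: [1.0, 0.0], 1: [0.0, 1.0]}
states = forward([0, 1, 1], embed, [mixing_layer, mixing_layer])
final = states[-1]  # a prediction head would read from these final vectors
```

A ReFT intervention edits some of the vectors in one of these intermediate lists before the next layer consumes them.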

In interpretability studies, the causal abstraction framework uses interchange interventions to establish the causal role of neural network components in implementing particular behaviors. The logic is as follows. Suppose one fixes a representation to the value it would have taken for a counterfactual input. If this consistently changes the model's output in the way a hypothesis about the producing component predicts, then that component plays a causal role in the behavior. Among the available methods, the distributed interchange intervention is the natural tool for testing whether a concept is encoded in a linear subspace of a representation, as the linear representation hypothesis claims. The DAS method, which learns such a subspace, has previously been used to find linear representations in language models. These include representations of entity attributes, sentiment, linguistic features and mathematical reasoning. However, several experiments indicate that DAS is highly expressive. It can find causally efficacious subspaces even in a randomly initialized transformer language model that has not yet learned any task-specific representations. This has sparked a debate about whether DAS is faithful enough for interpretability tasks.
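A distributed interchange intervention can be written as DII(b, s, R) = b + R^T (R s - R b). Here b is the representation for the base input, s is the representation for the counterfactual (source) input, and R is a matrix with orthonormal rows spanning the subspace under test. DAS learns R by gradient descent. The pure-Python sketch below instead uses a fixed, hand-picked rank-1 R for illustration. The patched vector takes the source's value inside the subspace and keeps the base's value everywhere else.

```python
# Distributed interchange intervention: DII(b, s, R) = b + R^T (R s - R b).

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

def dii(base, source, R):
    """Copy the subspace spanned by the rows of R from `source` into `base`."""
    diff = [s - b for s, b in zip(matvec(R, source), matvec(R, base))]
    return [b + c for b, c in zip(base, matvec(transpose(R), diff))]

R = [[0.6, 0.8, 0.0]]       # hand-picked rank-1 subspace (unit-norm row), not learned
base = [1.0, 1.0, 5.0]      # representation for the original input
source = [3.0, -1.0, 0.0]   # representation for a counterfactual input
patched = dii(base, source, R)
print(patched)
```

Replacing R s with a learned affine function of the base representation turns this same operation into the LoReFT edit.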

The expressivity of DAS suggests that the approach could be an ideal tool for controlling the behavior of a language model, in line with work on controllable generation and responsible editing. To adapt language models for downstream tasks, the ReFT framework therefore uses the distributed interchange intervention operation to build a new parameter-efficient method. A ReFT method is defined as a set of interventions. Any two interventions that operate on the same layer must act on disjoint sets of positions, and the parameters of all intervention functions remain independent. As a result, ReFT is a generic framework that encompasses interventions on hidden representations during the model's forward pass.
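The constraints above can be sketched as a small data structure. Following the description above, each intervention is treated as a triple: a function Phi, a set of positions P, and a layer l. The names `Intervention`, `check_disjoint`, `apply_at_layer` and `double` are hypothetical. A real implementation would hook into the model's forward pass rather than operate on plain lists.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

Vector = List[float]

@dataclass
class Intervention:
    phi: Callable[[Vector], Vector]  # intervention function with its own parameters
    positions: Set[int]              # P: token positions to edit
    layer: int                       # l: layer whose hidden representations are edited

def check_disjoint(interventions: List[Intervention]) -> None:
    """Raise if two interventions on the same layer share a position."""
    used: Dict[int, Set[int]] = {}
    for iv in interventions:
        taken = used.setdefault(iv.layer, set())
        overlap = taken & iv.positions
        if overlap:
            raise ValueError(f"layer {iv.layer}: positions {sorted(overlap)} edited twice")
        taken |= iv.positions

def apply_at_layer(hidden: List[Vector], layer: int,
                   interventions: List[Intervention]) -> List[Vector]:
    """Return a copy of one layer's hidden representations with its interventions applied."""
    out = [list(h) for h in hidden]
    for iv in interventions:
        if iv.layer == layer:
            for p in iv.positions:
                out[p] = iv.phi(out[p])
    return out

def double(h: Vector) -> Vector:
    """Hypothetical toy intervention function."""
    return [2.0 * x for x in h]

ivs = [Intervention(double, {0}, layer=1), Intervention(double, {2}, layer=1)]
check_disjoint(ivs)
edited = apply_at_layer([[1.0], [1.0], [1.0]], 1, ivs)  # [[2.0], [1.0], [2.0]]
```

Because the positions are disjoint, the order in which interventions on the same layer are applied does not change the result.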

ReFT: Experiments and Results

To compare LoReFT with existing PEFT frameworks, the authors run experiments on four diverse natural language processing benchmarks covering more than 20 datasets. The primary goal is to give a rich picture of how LoReFT performs in different scenarios. When LoReFT is used in practice, developers need to decide how many interventions to learn, and which input positions and layers to apply each one to. To make this choice tractable, the ReFT framework tunes four hyperparameters:

- The number of prefix positions to intervene on.

- The number of suffix positions to intervene on.

- What set of layers to intervene on.

- Whether or not to tie intervention parameters across different positions in the same layer.

By doing this, the ReFT framework simplifies the hyperparameter search space, and ensures only a fixed additional inference cost that does not scale with the length of the prompt.
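The following sketch shows why the inference cost stays fixed. Assume the prefix and suffix hyperparameters select the first p and the last s prompt positions. Then at most p + s positions are edited, whatever the prompt length. The helper name is hypothetical. Merging overlapping positions for very short prompts is an assumption of this sketch, not a documented rule.

```python
from typing import List

def intervention_positions(prompt_len: int, n_prefix: int, n_suffix: int) -> List[int]:
    """Hypothetical helper: the first n_prefix and last n_suffix prompt positions."""
    prefix = set(range(min(n_prefix, prompt_len)))
    suffix = set(range(max(prompt_len - n_suffix, 0), prompt_len))
    return sorted(prefix | suffix)  # overlapping positions are merged (assumption)

for length in (10, 100, 1000):
    positions = intervention_positions(length, n_prefix=2, n_suffix=2)
    print(length, len(positions), positions)
```

The number of edited positions printed for each prompt length stays at four, even as the prompt grows by two orders of magnitude.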

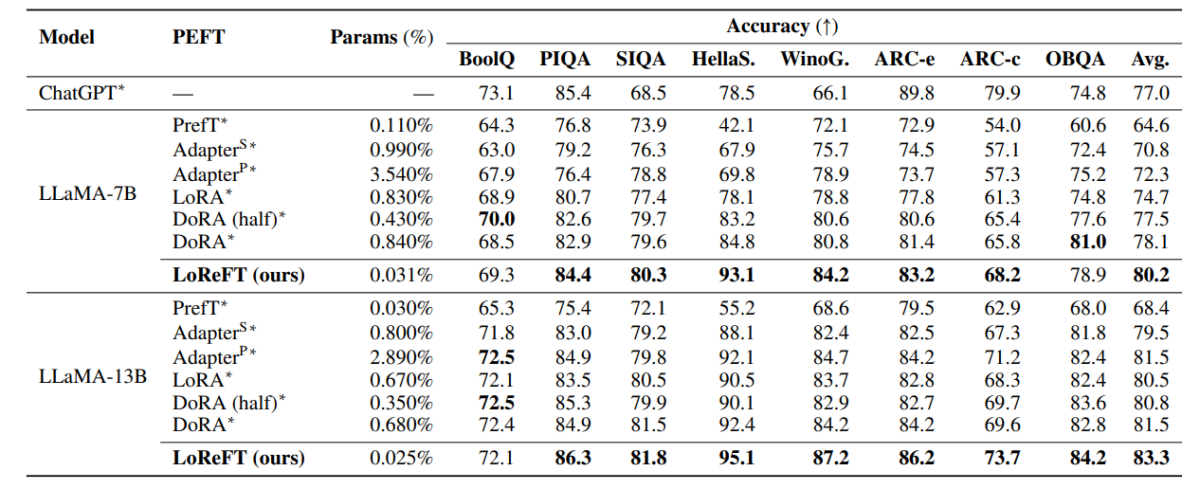

The above table compares the accuracy of LoReFT against existing PEFT methods on LLaMA-7B and LLaMA-13B across 8 commonsense reasoning datasets. LoReFT outperforms existing PEFT approaches by a decent margin despite using far fewer parameters. The reported LoReFT numbers are averages over three runs with distinct random seeds. Param (%) is calculated by dividing the number of trainable parameters by the total number of parameters of the base model.
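To make the param (%) definition concrete, the sketch below counts the trainable parameters of LoReFT interventions and divides by the base model size. It assumes the parameterization in which R and W are each r × d and b has length r, so one intervention has 2rd + r parameters. The configuration is an illustrative assumption, not the setting behind the reported table: hidden size 4096, rank 8, separate prefix and suffix interventions on 4 layers, and roughly 6.7 billion base parameters.

```python
def loreft_params(d: int, r: int) -> int:
    """Trainable parameters of one LoReFT intervention: R and W (each r x d) plus b (r)."""
    return 2 * r * d + r

def param_percent(trainable: int, total: int) -> float:
    """param(%): trainable parameters divided by total base-model parameters, times 100."""
    return 100.0 * trainable / total

# Illustrative assumptions only: hidden size 4096, rank 8, untied prefix and
# suffix interventions (2 per layer) on 4 layers, ~6.7B base-model parameters.
per_intervention = loreft_params(d=4096, r=8)   # 65544
trainable = per_intervention * 2 * 4            # 524352
print(f"param(%) = {param_percent(trainable, 6_700_000_000):.4f}")
```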

The arithmetic reasoning comparison evaluates LoReFT against existing PEFT methods on LLaMA-7B and LLaMA-13B across 4 arithmetic reasoning datasets. It again reports the average performance of three runs with distinct random seeds. LoReFT again uses a much smaller fraction of parameters (param (%)), but its advantage is less clear-cut in this domain. Arithmetic reasoning is not among the tasks on which LoReFT is credited with new state-of-the-art results.

On the GLUE benchmark, the accuracy comparison uses RoBERTa-base and RoBERTa-large and reports the average performance of five runs with distinct random seeds. Despite using a much smaller fraction of parameters (param (%)), LoReFT outperforms existing PEFT methods by a considerable margin.

Final Thoughts

In this article, we have talked about LoReFT, a powerful alternative to existing PEFT frameworks. It achieves strong performance across benchmarks from four different domains while being up to 50 times more parameter-efficient than previous state-of-the-art PEFT methods. Fine-tuning lets a single pre-trained model adapt to new domains and tasks with little in-domain data, but fine-tuning an entire model is expensive. PEFT methods reduce that cost by updating only a small fraction of the weights. ReFT instead keeps the weights frozen and learns interventions on hidden representations. Notably, LoReFT establishes new state-of-the-art performance on commonsense reasoning, instruction-following, and natural language understanding against the strongest PEFTs.